091. Cleaning Up Longhorn and Vista

“Windows Ultimate Extras are programs, services, and premium content for Windows Vista™ Ultimate. These features are available only to those who own a copy of Windows Vista™ Ultimate.” —box copy

Whenever you take on a new role you hope that you can just move forward and start work on what comes next without looking back. No job transition is really like that. In my case, even though I had spent six months “transitioning” while Windows Vista went from beta to release, and then even went to Brazil to launch Windows Vista, my brain was firmly in Windows 7. I wanted to spend little, really no, time on Windows Vista. That wasn’t entirely possible because parts of our team would be producing security and bug fixes at a high rate and continuing to work with OEMs on getting Vista to market. Then, as was inevitable, I was forced to confront the ghosts of Windows Vista and even Longhorn. In particular, there was a key aspect of Windows Vista that was heavily marketed but had no product plan, and there was a tail of Longhorn technologies that needed to be brought to resolution.


Early in my tenure, I received an escalation (!) to “fund” Windows Ultimate Extras. I had never funded anything before via a request to fund, so this itself was new, and as for the escalation. . . I had only a vague idea what Ultimate Extras were, even though I had recently returned from the Windows launch event in Brazil where I was tasked with emphasizing them as part of the rollout. The request was deemed urgent by marketing, so I met with the team, even though in my head Vista was in the rearview mirror and I had transitioned to making sure servicing the release was on track, not finishing the product.

The Windows Vista Ultimate SKU was the highest-priced version of Windows, aimed primarily at Windows enthusiasts and hobbyists because it had all the features of Vista: those for consumers, business, and enterprise. The idea of Ultimate Extras was to “deliver additional features for Vista via downloadable updates over time.” At launch, these were explained to customers as “cutting-edge features” and “innovative services.” The tech enthusiasts who opted for Ultimate, for a bunch of features that they probably wouldn’t need as individuals, would be rewarded with these extra features over time. The idea was similar to the Windows 95 Plus! product, except that Plus! was a separate add-on sold at retail alongside Windows 95.

There was a problem, though, as I would learn. There was no product plan and no development team. The Extras didn’t exist. There was an Ultimate Extras PUM, but the efforts were to be funded by using cash or somehow finding or conjuring code. This team had gotten ahead of itself. No one seemed to be aware of this and the Extras PUM didn’t seem to think this was an issue.

As the new person, I was terrified by this problem. We shipped and charged for the product. To my eyes the promise, or obligation if one prefers, seemed unbounded. These were in theory our favorite customers.

The team presented what amounted to a brainstorm of what they could potentially do. There were ideas for games, utilities, and so on. None of them sounded bad, but none of them sounded particularly Ultimate, and worse: None existed.

We had our first crisis. Even though this was a Vista problem, once the product released everything became my problem.

The challenge with simply finding code from somewhere, such as a vendor, licensing from a third party, or something lying around Microsoft (like from Microsoft Research), was that the journey from that code to something shippable to the tens of millions of customers running on a million different PC configurations, in 100 languages around the world, and also accessible, safe, and secure, was months of work. The more content-rich the product was, in graphics or words, the longer and more difficult the process would be. I don’t know how many times this lesson needed to be learned at Microsoft, but suffice it to say small teams trying to make a big difference learned it almost constantly.

And then there was the issue of doing it well. Not much of what was brainstormed at the earliest stages of this process was overly compelling.

With nothing particularly ultimate in the wings, we were poised for failure. It was a disaster.

We set out to minimize the damage to the Windows reputation and preserve the software experience on PCs. Over the following months we worked to define what would meet a reasonable bar for completing the obligation; unfortunately, I mean this legally, as that was clearly the best we could do. It was painful, but the prospect of spinning up new product development meant there was no chance of delivering for at least another year. The press and the big Windows fans were unrelenting in reminding me of the Extras at every encounter. If Twitter had been a thing back then, every tweet of mine would have had a reply “and…now do Ultimate Extras.”

Ultimately (ha), we delivered some games, video desktops, sound schemes, and, importantly, the enterprise features of BitLocker and language packs (the ability to run Vista in more than one language, which was a typical business feature). It was very messy. It became a symbol of the lack of a plan as well as of the myth of finding and shipping code opportunistically.

Vista continued to require more management effort on my part.

In the spring of 2007, shortly after availability, a lawsuit was filed. The complaint involved the little stickers that read Windows Vista Capable placed on Windows XP computers that manufacturers were certifying (with Microsoft’s support) for upgrade to Windows Vista when it became available. This was meant to mitigate to some degree the fact that Vista missed the back-to-school and holiday selling seasons by assuring customers their new PC would run the much-publicized Vista upgrade. The sticker on the PC only indicated it could run Windows Vista, not whether the PC also had the advanced graphics capabilities to support the new Vista user experience, Aero Glass, which was available only on Windows Vista Home Premium and above. It also got to the issue of whether supporting those features was a requirement or simply better if a customer had what was then a premium PC. The question was whether this was confusing or too complex for customers to understand relative to buying a new PC that supported all the features of Vista.

![Email thread from Sunday, February 18, 2007, among Steve Ballmer, Jon Shirley, Steven Sinofsky, Bill Veghte, and Jon Devaan about Windows Vista driver availability, the Intel 915 and 945 chipsets, and the “Vista Capable” label, as filed in Case 2:07-cv-00475-MJP, Document 131 (filed 02/27/2008)](https://substackcdn.com/image/fetch/$s_!jCZr!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2Fa8a872fd-eb75-4ba8-82a5-648a974e4885_4249x2734.png)

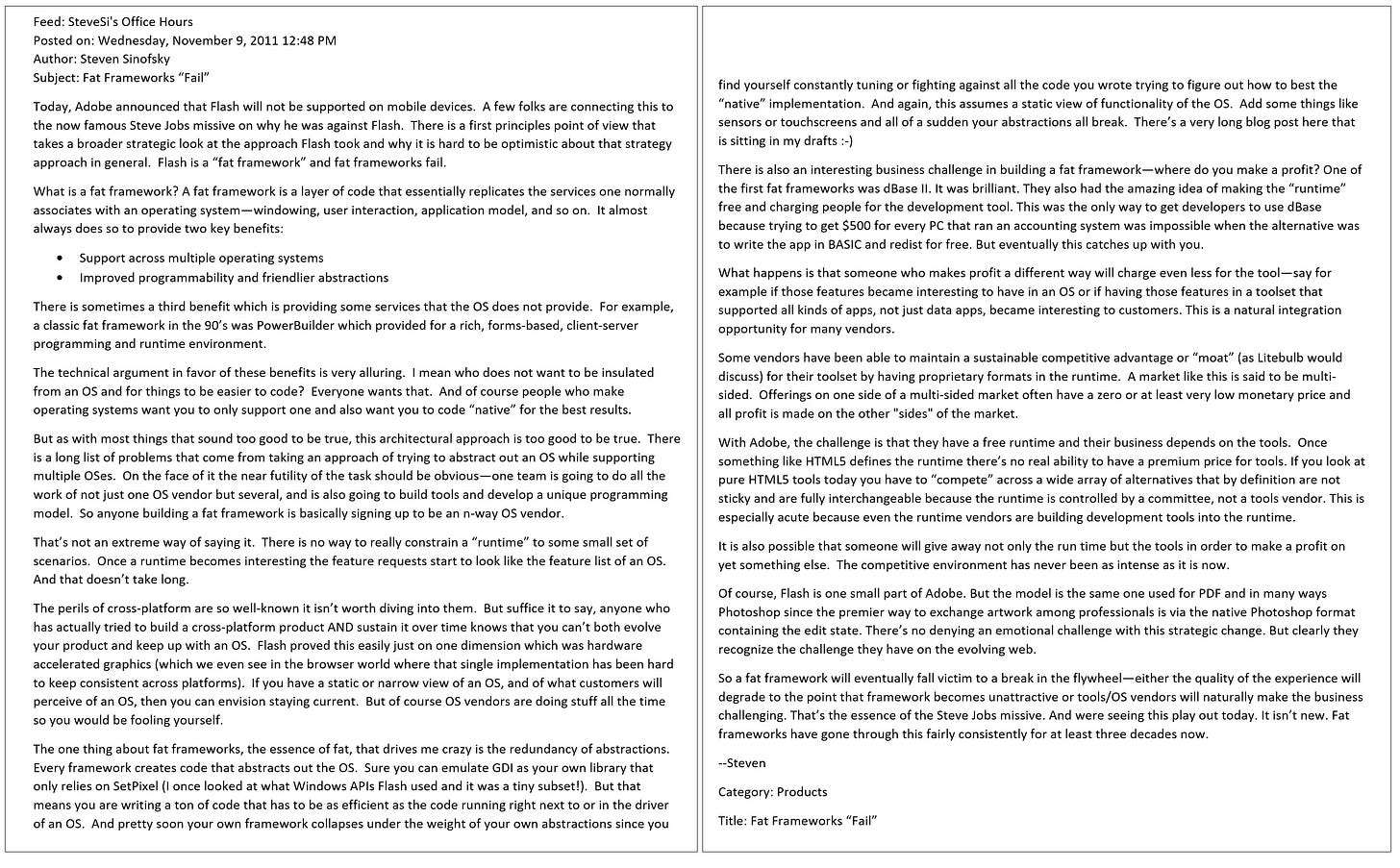

A slew of email exhibits released in 2007 and 2008 showed the chaos and tension over the issue, especially between engineering, marketing, sales, lawyers, and the OEMs. One could imagine how each party had a different view of the meaning of the words and system requirements. I sent an email diligently describing the confusion, which became an exhibit in the case along with emails from almost every exec and even former Microsoft president and board member Jon Shirley (JonS) detailing their personal confusion.

The Vista Capable challenge was rooted in the type of ecosystem work we needed to get right. Intel had fallen behind on graphics capabilities while at the same time wanting to use differing graphics as part of their price waterfall. Astute technical readers would also note that Intel’s challenge with graphics was rooted deeply in their failure to achieve critical mass for mobile and the resulting attempt to repurpose their failed mobile chipsets for low-end PCs. PC makers working to have PCs available at different price points loved the idea of hardline differentiation in Windows, though they did not like the idea of having to label PCs as basic, hence the XP PCs were labeled “capable.” Also worth noting was that few Windows XP PCs, especially laptops, were capable of the Home Premium experience due to their lack of graphics capabilities. When Vista released, new PCs would have stickers stating they ran Windows Vista or Windows Vista Basic, at least clarifying the single sticker that was placed on eligible Windows XP computers.

Eventually, the suit achieved class-action status, always a problem for a big company. The fact that much of the chaos ensued at the close of a hectic product cycle only contributed to this failure. My job was to support those on the team who had been part of the dialogue across PC makers, hardware makers, and the numerous marketing and sales teams internally.

The class-action status was eventually reversed, and the suit(s) reached a mutually agreeable conclusion, as they say. Still, it was a great lesson in the need to repair both the relationships and the communication of product plans with the hardware partners, not to mention to be more careful about system requirements and how features are used across Windows editions.

In addition to these external issues, the Vista team had gotten ahead of itself on code sharing and platform capabilities that spanned multiple groups in Microsoft.

The first of these was one of the most loved modern Windows products built on top of Windows XP, Windows Media Center Edition (WMC, or sometimes MCE). In order to tap into the enthusiasm for the PC in the home and the convergence of television and PCs, long before smartphones, YouTube, Netflix, or even streaming, the Windows team created a separate product unit (rather than an integrated team) that would pioneer a new user interface, known as the 10-foot experience, and a new “shell” (always about a shell!) designed around using a PC with a remote control to show live television, home DVD discs, videos, and photos on a big screen, and also play music. This coincided with the rise in home theaters, large inexpensive disk drives capable of storing a substantial amount of video, camcorders and digital cameras, and home broadband internet connections. The product was released in 2002 and soon developed a relatively small but cultlike following. It even spawned its own online community called “Green Button,” named after the green button on the dedicated remote control that powered the shell’s 10-foot user interface.

The product was initially sold only with select PCs because of the need for specific hardware capabilities. Later, with Windows Vista (and Windows 7), WMC was included in the premium editions. Usage, measured by both sales and anonymously collected telemetry, was low, and repeat usage was a small fraction of even those customers. Nevertheless, there were vocal fans, and we had no plans to give up.

WMC was hitting real challenges in the market, though, especially in the United States, where television was moving from analog CATV to digital, and with digital came required set-top boxes and a new and not quite available technology called CableCARD, required to decrypt the cable signal. Not only did this make things difficult for WMC, but it made things difficult for anyone wanting to view cable TV, as if the encrypted analog channels were not difficult enough already. Everyone who tried to use CableCARD had a story about activating the card through what was essentially a debug interface, typing in long hex strings and waiting for a “ping” back from the mysterious “head end.” The future for the innovative TV experience in WMC was looking bleak.

Additionally, WMC was bumping up against the desires of the Xbox team to expand beyond gaming. The Xbox team had recently unveiled a partnership with the new Netflix streaming service to make it available on Xbox.1 Some of the key leaders on WMC had moved from Windows to the Xbox organization and began to ask about moving the WMC team over with them.

At the time, I was up to my elbows in looking at headcount and orgs and was more than happy to move teams out of Windows, especially if it was straightforward, meaning they could just pick up the work and there was no shipping code being left behind unstaffed. This quickly became the first debate, in my entire time at Microsoft, over headcount and budgets because the destination organization was under tight revenue and expense review.

The WMC team was, surprisingly, hundreds of people, but it also had dependencies on numerous other teams across networking, graphics, and the core user experience. We could easily move the core WMC team but getting a version of WMC integrated with the new engineering system and to-be-developed delivery schedule (which we were planning) was a concern. Of course the team wanted to move to Xbox but had little interest in delivering WMC back to Windows, especially as the overall engineering process changed. They literally thought we would just move all the headcount and team and then create a new WMC team. They had awful visions of being a component on the hook to meet a schedule that was unappealing. We could not just give up on WMC, even with such low usage, without some sort of plan.

I learned that moving humans and associated budgets was fine. But CollJ had been working with finance on headcount and was told we also had to move revenue, something I had never heard of. I had no idea how that might even work. In Vista, Media Center was part of the Home Premium and Ultimate SKUs, and no longer a separate product as it had been for Windows XP. How could one arrive at the revenue for a single feature that was part of a SKU of Vista? Perhaps back when WMC was a separate product this made sense, but at the time it seemed like an accounting farce. In fact, the judge in the Vista Capable lawsuit even removed class-action status because of an inability to determine which customers bought Windows Vista because of which premium features.

Microsoft had been divided into seven externally reported business segments; each quarterly earnings filing with the SEC reported a segment as though it were an independent business. The result of this was more visibility for the financial community, which was great. Internally these segments did not line up with the emphasis on code-sharing, feature bundling, or shared sales efforts. For example, from a product development perspective there was a lot of code sharing across all products—this was a huge part of how Microsoft developed software. Costs for each segment could never accurately reflect the R&D costs. An obvious example was how much of Windows development could/would/should be counted in the Server and Tools segment, given that so much of the server product was developed in the Windows segment.

My view was that the revenue allocation for any specific feature of Windows was $0. That was the definition of product-market fit and the reality that nobody bought a large software product for any single feature. This was always our logic in Office, even when we had different SKUs that clearly had whole other products to justify the upsell. Office Professional for years cost about $100 more than Office Standard simply for the addition of the Access database. We never kidded ourselves that Access was genuinely worth billions of dollars on its own.

Over several weeks, however, we had to arrive at some arbitrary number that satisfied finance, accounting, tax, and regulatory people. To do this, we moved the hundreds of people working on WMC to Xbox along with a significant amount of Windows unit revenue per theoretical WMC customer. It wasn’t my math, but it added up to a big number moving to the Entertainment segment. In exchange, we hoped we would get back a WMC that had improved enough to satisfy an enthusiastic fanbase, knowing that the focus was shifting to Xbox.

Given the fanbase externally, including several of the most influential Windows writers in the press, there was a good chance that such a seemingly arbitrary organizational move would leak. The move would be viewed by many as abandoning WMC. I used an internal blog post to smooth things over, describing my own elaborate home audio/video system, which included Windows Vista Ultimate and pretty much every Microsoft product. In doing this, I chalked up a ton of points from tech enthusiast employees, showing that I had knee-deep knowledge of our products, something most assumed an Office person wouldn’t have, perhaps much as BillG, when I first interviewed to work for him 15 years earlier, had not understood why a C++ developer would know Excel. In practice, my post made it clear that keeping this technology working to watch TV casually at home was impossible. I hated my home setup. It was ridiculous.

As part of the final Longhorn cleanup, I also needed to reconcile the strategic conflicts between the Windows platform and the Developer Tools platform, and as the new person I found myself in the middle. It was a battle that nobody wanted to enter for fear of the implications of making the wrong technology bet.

The Developer division created the .NET platform starting in the early 2000s to build internet-scale web server applications delivered through the browser, primarily to compete with Java on the server. It was excellent and remains loved, differentiated, and embraced by the corporate world today. It crushed Java in the enterprise market. It was almost entirely responsible for the success Windows Server saw in the enterprise web and web application market.

The .NET client (desktop programs one would use on a laptop) programming model was built “on top” of the Windows programming model, Win32, with little coordination or integration with the operating system or the .NET server platform. This created architectural and functional complexity, along with application performance and reliability problems, resulting in a messy message to developers. Should developers build Win32 apps or should they build .NET apps? While this should not have been an either/or, it ended up as such because of the differing results. Developers wanted the easier-to-use tools and language of .NET, but they wanted the performance and integration with Windows that came from Win32/C++. This was a tastes great, less filling challenge for the Developer division and Windows. In today’s environment, there are elements of this on the Apple platforms when it comes to SwiftUI versus UIKit; a search for that debate will turn up countless blog posts on all sides.

It was also a classic strategic mess created when a company puts forth a choice for customers without being prescriptive (or complete). Given a choice between A and B, many customers naturally craft the third choice, which is the best attributes of each, as though the code was a buffet. Still other customers polarize and claim the new way is vastly superior or claim the old way is the only true way and until the new way is a complete superset they will not switch. Typical.

Longhorn aimed to reinvent the Win32 APIs, but during the six-year gap after Windows XP, the void among Microsoft platform zealots was filled by the .NET strategy described above. The rest of the much larger world was focused on HTML and JavaScript. At the same time, nearly all commercial desktop software remained Win32, but the number of new commercial desktop products coming to market was diminishingly small and shrinking. Win32 was already on life support.

The three pillars of Longhorn, WinFS, Avalon, and Indigo, failed to make enough progress to be included in Vista (together these three technologies were referred to as WinFX). With Vista shipping, each of these technologies found a new home or was shut down. I had to do this last bit of Vista cleanup, which lingered long after the product was out the door.

WinFS receded into the world of databases where it came from. As discussed, it was decidedly the wrong approach and would not resurface in any way. Indigo was absorbed into the .NET framework where it mostly came from. Avalon, renamed Windows Presentation Foundation (WPF), remained in the Windows Client team, which meant I inherited it.

![InfoWorld sidebar “Through the Open Source Lens” (July 19, 2004, p. 41), in which Mozilla chief architect Brendan Eich and Miguel de Icaza, CTO of Novell’s Ximian services business unit, give their perspectives on Longhorn’s Avalon presentation subsystem, including de Icaza’s remark that “Avalon is the next ActiveX”](https://substackcdn.com/image/fetch/$s_!ZgQc!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F925cf2a4-16cc-415e-9f95-426103fb54bc_1108x2102.jpeg)

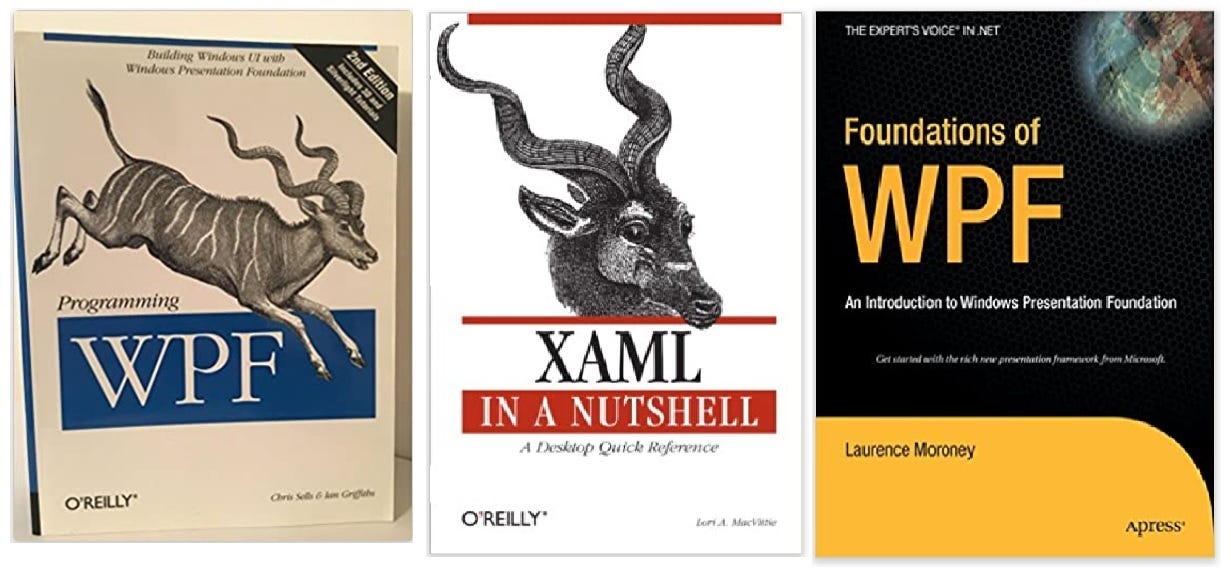

WPF had an all-encompassing vision that included a unique language (known as XAML) and a set of libraries for building graphical and data-rich applications. Taken to its logical end point, Avalon was described by the team as a replacement for both HTML in the browser and .NET on the client. This was the reason work had stopped on Internet Explorer, as the overall Longhorn vision was to bring this new level of richness to browsing. From the outside (where I was, in Office) it seemed outlandish to me, and many others on the outside agreed, particularly those driving browser standards forward. Still, this opened a second front in the race to improve Win32, in addition to .NET. All along WPF would claim allegiance and synergy with .NET, but the connections were thin, especially as WPF split into a cross-platform, in-browser runtime and a framework largely overlapping with .NET. When I reflected on WPF I would have flashbacks to my very first project, AFX, and how we had created a system that was perfectly consistent and well-architected yet unrelated to everything, in addition to being poorly executed.

But what should developers have used—classic Win32, or the new frameworks of .NET, WPF, or something else I just learned about called Jolt? These were big decisions for third-party developers because there was an enormous learning curve. They had to choose one path and go with it because the technologies all overlapped. That was why I often called these frameworks “fat frameworks,” because they were big, slow, and took up all the conceptual room. Importantly, the common thread among the frameworks was their lack of coherence with Win32—they were built on top of Win32, duplicating operating system capabilities. Fat was almost always an insult in software.

The approach taken meant that both .NET on the client and WPF were built on the shakiest of foundations, and what we were seeing with .NET on the client was exactly what all past experience predicted. I had a very long and ongoing email thread (which I later turned into an Office Hours blog post) on this topic with the wonderfully kind head of Developers, S. Somasegar (Somase, aka Soma), where I pushed and pushed on the challenges of having fat frameworks emerge while we had a desire to keep Windows relevant. As I wrote the email, I was struck by how similar this experience was to the one I had twenty years earlier as we built, and then discarded, the fat framework called AFX. While history does not repeat itself, it does rhyme. Many would debate the details, but fundamentally the team took the opposite path from the one we took years earlier. I recognize, writing this today, that some are still in the polarized camp and remain committed to some of these technologies. One of the most successful tools Microsoft ever released was Visual Basic, which was an enormously productive combination of framework, programming language, and development tool. That was not what we had here.

Nothing with developers and Microsoft was ever simple. Microsoft’s success with developers was often quite messy on the ground.

Along with large product development teams, there was also a large evangelism team responsible for gaining support and traction for new technologies among developers. This team was one of the gems of Microsoft. It masterfully built a community around developer initiatives. It was largely responsible for taking the excellent work in .NET for Server and landing it with thousands of enterprise developers. In fact, part of the challenge was that the evangelism team had moved to the Developer division, where the org chart spoke more loudly than the overall company mission, and the priority and resourcing of evangelism tilted heavily toward .NET in all forms over the declining Win32 platform.

As a counterexample, the evangelism team, seeing the incoherence in product execution, provided significant impetus to force the Windows NT product to fully adopt the Windows API, paving the way for Microsoft’s Win32 success. In Chapter II, “I Shipped, Therefore I Am,” I shared the story of my early days as a member of the C++ team pointing out how Windows NT differed from the classic Windows APIs. Many of the lessons in how divergent the two Windows APIs were surfaced in the well-known Windows NT porting lab run by the evangelism team, where developers from around the world would camp out for a few weeks of technical support in moving applications to Windows NT or Windows 95, before both shipped.

Perhaps an organization-driven result was a reasonable tradeoff, but it was never made explicit, as was often the case with cross-divisional goals. In many ways the challenges were accelerated by our own actions, but because that was never stated as a clear goal, we spent too much time in meetings dancing around the erosion of Win32 we were seeing.

The evangelism team never failed at its mission and reliably located or created champions for new technologies. It seemed there were always some outside Microsoft looking to get in early on the next Microsoft technologies. The evangelism team was expert at finding and empowering those people. They could summon the forces of the book, consulting, and training worlds to produce volumes of materials: whole books and training courses on XAML were available even before Vista was broadly deployed. Although WPF had not shipped with any product, it had a strong group of followers who trusted Microsoft and made a major commitment to use WPF for their readers, clients, or customers (as authors, consultants, or enterprise developers). Before Vista shipped, WPF appeared to have initial traction and was a first-class effort, along with .NET on the client. WPF had an internal champion as well: the Zune team used early portions of WPF for the software that accompanied its ill-fated, but well-executed, iPod competitor.

Things were less clear when it came to WPF and Vista. WPF code would ship with Vista, but late in the product cycle the shiproom command came down that no one should use WPF in building Vista because of the burden it would place on the memory footprint and performance of applications. This caused all sorts of problems when word spread to evangelists (and their champions). People were always looking for signals that Microsoft had changed its mind on some technology. Seeing the risk that poor performance posed to WPF, the Avalon team (the team was also called Avalon) set out to shrink WPF and XAML into a much smaller runtime, something akin to a more focused, scenario-specific product. This was a classic Windows “crisis” project, called Jolt, and it was added to the product plans sometime in 2005 or early 2006, while the rest of Longhorn was just trying to finish with quality.

Jolt was designed to package up as much of WPF as could fit in a single ActiveX control. It was also called WPF/E, for WPF/Everywhere, and in its final form it was called Silverlight. This would make it super easy to download and use. Streaming video and small graphical games to be used inside a browser became the focus. Sound like Adobe Flash? Yes, Jolt was being pitched internally as Microsoft’s answer to Adobe Flash. To compete effectively with Flash, Jolt would also aim to be available across operating systems and browsers, something that made it even less appealing to a Windows strategy, and more difficult to execute. I was of the view that Adobe Flash was on an unsustainable path simply because it was both (!) a fat framework and an ActiveX control. By this time, ActiveX controls, which a few years earlier had been Microsoft’s main browser extensibility strategy, had come to be viewed as entirely negative because they were not supported in other browsers and because they were used to spread malware by tricking people into running them. The technical press and haters loved to refer to ActiveX as CaptiveX. As an aside, one of my last projects working on C++ was to act overly strategic and push us to adopt the predecessor to ActiveX, known as OLE Controls, and implement those in our C++ library, affording great, but useless, synergy with Visual Basic.

For me, this counted as two huge strikes against Jolt. Imagine a strategic project, at this stage in the history of the company, that came about from a crisis moment trying to find any code to ship while also using the one distribution method we had already condemned (for doing exactly the same thing previously). I did not understand where it was heading.

Somehow, I was supposed to reconcile this collection of issues. When I met with the leaders of the team, they were exhausted though still proud of what they had accomplished. When I say exhausted, I mean physically drained. The struggle they had been through was apparent. Like many who had worked on the three pillars of Vista, they had found the past few years enormously difficult. They wanted to salvage something of their hard work. I couldn’t blame them. At the same time, those weren’t the best reasons to ship code to hundreds of thousands of developers and millions of PCs without a long-term strategy for customers. My inclination was to gently shut down this work rather than support it forever, knowing there was no roadmap that worked.

The team, however, had done what Windows teams often did: evangelized their work and gained commitments to foreclose any attempt to shut down the effort. With the help of the evangelism team, they had two big customers lined up in addition to the third parties that the evangelism group had secured. In addition to Zune, the reboot of Windows Phone (which would become Windows Phone 7) would base portions of its developer strategy on Jolt. Jolt would not be used to build the phone itself; rather, it would be a way to make it easy for developers to build apps for the phone operating system (prior to this time, apps for the phone were built on the ancient Windows APIs that formed the original Windows CE operating system for phones). The Developer division wanted to bring WPF and Indigo into the .NET Framework and create one all-encompassing mega-framework for developers, branded as the new version of .NET. The way the .NET Framework generally addressed strategic issues was to release a new version that put more stuff under its umbrella with many new features, even if those new features strategically overlapped with other portions of .NET.

Given all this, the choice was easy for me. As they requested, the Phone team and the Developer division took over responsibility for the Jolt and WPF teams, respectively. It was a no-brainer. Eventually the code shipped with Windows 7 as part of a new .NET Framework, which was planned anyway. Most everyone on the Windows team, particularly the performance team in COSD and the graphics team in WEX, was quite happy with all of this. The Windows team had always wanted to focus on Win32, even though there was little data to support such a strategy.

While this decision clarified the organization and responsibility, it in no way slowed the ongoing demise of Windows client programming nor did it present a coherent developer strategy for Microsoft. The .NET strategy remained split across WPF and the old .NET client solutions, neither of which had gained or were gaining significant traction—even with so much visible support marshalled by the evangelism team. Win32 had slowed to a crawl, and we saw little by way of new development. It was discouraging.

Again, many reading this today will say they were (or remain) actively developing on one or the other. My intent isn’t to denigrate any specific effort that might be exceedingly important to one developer or customer, but simply to describe what we saw happening in total across the ecosystem, as evidenced by our in-market telemetry. One of the most difficult challenges with a developer platform is that most developers make one bet on a technology and use it. They do not see histograms or pie charts of usage because they are 100% committed to the technology. They are also vocal, and with good reason: their livelihoods depend on the ongoing health of a technology.

With everything to do with developers, APIs, runtimes, and the schism in place, the problem, or perhaps the solution, was that this was all “just code,” as we would say. That meant two things. First, there was always a way to demonstrate strategy or synergy. In a sense, this was what we’d disparagingly call stupid developer tricks: slides, demonstrations, or strategic assertions that showed technical relationships between independently developed, somewhat-overlapping, and often intentionally different technologies. The idea was to prove that the old and the new, two competing new technologies, or two only thematically connected technologies were indeed strategically related. My project to support OLE Controls in C++ was such a trick.

Technically, these tricks took the form of switching languages, using two strategies at the same time, or tapping into a Win32 feature from within some portion of something called .NET. A classic example came up during the discussion about where Jolt should reside organizationally. It was pointed out that Jolt had no support for pen computing or, subsequently, touch (among other things), since there was none in .NET or WPF to begin with. Both were key to Windows 7 planning efforts. Very quickly the team was able to demonstrate that it was entirely possible to call Win32 APIs directly from within Jolt. This was rather tautological, but it would also undermine the cross-platform value proposition of Jolt and, importantly, lacked tools and infrastructure support from Jolt.

Second, this was all “just code,” which meant that at any time we could change something and maybe clean up edge cases or enable a new developer trick. Fundamentally, there was no escaping that Win32, .NET, WPF, and even Jolt were designed separately and for different uses, with little true architectural synergy. Even to this day, people debate these as developers do; this is simply a Microsoft-only version of what goes on across the internet when people debate languages and runtimes. Enterprise customers expect more synergy, alignment, and execution from a single company. More importantly, developers making a bet on the Microsoft platform expect their choices to be respected, maintained, and enhanced over time. That is essentially impossible when starting from a base like the one described here, which Microsoft amassed from 2000 to 2007.

As simple as it was to move these teams, in many ways it represented a failure on my part, or, more correctly, a capitulation. I often ask myself if it would have been better to wind down the efforts rather than allow the problem to move to another part of the company. I was anxious to focus and move on, but it is not clear that was the best strategy when given an opportunity to bring the change and clarity developers were so clearly asking for, even if it meant short-term pain and difficulty. It would have been brutal.

It grew increasingly clear that there were no developers (in any significant number, not literally zero) building new applications for Windows, not in .NET or WPF or Win32. The flagship applications for Windows, like Word, Excel, and others from Microsoft along with Adobe Photoshop or Autodesk AutoCAD, were all massive businesses, but built on legacy code. The thousands of IT applications connecting to corporate systems, written primarily in Visual Basic, all remained in daily use but were being phased out in favor of browser-based solutions for easier deployment, management, portability, and maintenance. They were not using new Windows features, if any existed. The most active area for Windows development was gaming, and those APIs were yet another layer in Windows, called DirectX, which was part of WEX and probably the most robust and interesting part of Win32. Ironically, WPF was also an abstraction on top of those APIs. ISVs weren’t using anything new from Microsoft, so it wasn’t as though we had a winning strategy to pick from.

![From Wired Magazine: Anderson: A quote of yours that I've always loved is that Netscape would render Windows "a poorly debugged set of device drivers." Andreessen: In fairness, you have to give credit for that quote to Bob Metcalfe, the 3Com founder. Anderson: Oh, it wasn't you? It's always attributed to you. Andreessen: I used to say it, but it was a retweet on my part. [Laughs.] But yes, the idea we had then, which seems obvious today, was to lift the computing off of each user's device and perform it in the network instead. It's something I think is inherent in the technology - what some thinkers refer to as the "technological imperative." It's as if the technology wants it to happen.](https://substackcdn.com/image/fetch/$s_!qsBw!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F772f77ed-ab73-41c8-9235-fddac3344e4c_1582x856.png)

Further evidence of the demise of Win32 arose as early as 1996 from Oracle’s Larry Ellison, who put forth the idea of a new type of browser-only computer, the Network Computer. (Sound like a Chromebook?) At the time, Marc Andreessen famously said that Windows would become simply a “poorly debugged set of device drivers,” meaning that the only thing people would care about would be running the browser. Years later, Andreessen would point out that the line originated with Bob Metcalfe.[2] Eight years later we had reached the point where the only new, interesting mainstream Windows programs were browsers, and at this time only Firefox was iterating on Windows, and the only interesting thing about Firefox was what it did with HTML, not Windows. The device drivers still had problems in Vista. In fact, that was the root of the Vista Capable lawsuit!

Moving WPF and Jolt to their respective teams, while an admission of defeat on my part, could best be characterized as a pocket veto. These were not the future of Windows development, but I wasn’t sure what would be. We in Windows were doubling down on the browser for sure, but not as leaders, rather as followers. We had our hands full trying to debug the device drivers.

XAML development continues today, though in a much different form. While it does not make a showing in the widely respected Stack Overflow developer survey[3] of over 80,000 developers in 181 countries, it maintains a spot in the Microsoft toolkit. XAML will come to play an important role in the development of the next Windows release as well.

With the team on firm(er) ground and now moving forward, we finally started to feel as though we had gained some control. By September 2007 we were in beta test with the first service pack for Vista, which OEMs and end-users anxiously awaited.

The team was in full execution mode now and we had milestones to plow through. While I felt we were heading in the right direction and had cleared the decks of obvious roadblocks, there was a looming problem, again from Cupertino. What was once a side bet for Microsoft would prove to be the most transformative invention of the 21st century…from Apple.

On to 092. Platform Disruption…While Building Windows 7 [Ch. XIII]

1. https://news.microsoft.com/2008/07/14/microsoft-and-netflix-unveil-partnership-to-instantly-stream-movies-and-tv-episodes-to-the-tv-via-xbox-live/
2. https://www.wired.com/2012/04/ff-andreessen/
3. https://insights.stackoverflow.com/survey/2021#technology-most-popular-technologies
