028. Pivotal Offsite

“The Internet can best be described as a ‘mania’.” —Bill Gates introduction at the Internet Offsite, April 5, 1994.

There was no shortage of energy around the internet. It was clear that a bunch of stuff would happen. Turning that energy into something resembling a strategy was an open question. For all the excitement, each group seemed to have its own way of defining the Internet, or its own view of how it could subsume the Internet into existing products. Convening an offsite of the 20 or so most senior product leaders in the company was a big deal. All I could really do was create an opportunity for leaders to lead and strategy to emerge. The rest was up to BillG and those leaders. JAllard and I brought the enthusiasm and hopefully a spark. It was not going to be easy.


Microsoft loved a good offsite. We loved a chance to “wallow” in the minutiae of technologies, implementation, and competitors. We also enjoyed tearing apart ideas and approaches with our proverbial tech buzzsaw. In setting up the offsite I had no idea how critical it would become.

BillG famously tilted (or pivoted) the company away from character-based MS-DOS products to graphical user interface products in a retreat just a decade earlier. Platform shifts in technology seem to come in these decade waves (though perhaps that is a retroactive timeline). Was the Internet the next platform shift, even though GUI had just started? Was this offsite going to be as pivotal to Microsoft’s future as when the bet was made on making Excel for Macintosh? I certainly hoped for that, but had no idea how the company’s leaders would see things when they were all assembled to discuss it. I thought about that as I remembered DougK, the inventor of minimal recalculation in spreadsheets, telling me the story of leaving Microsoft after that offsite because he disagreed with the new direction.

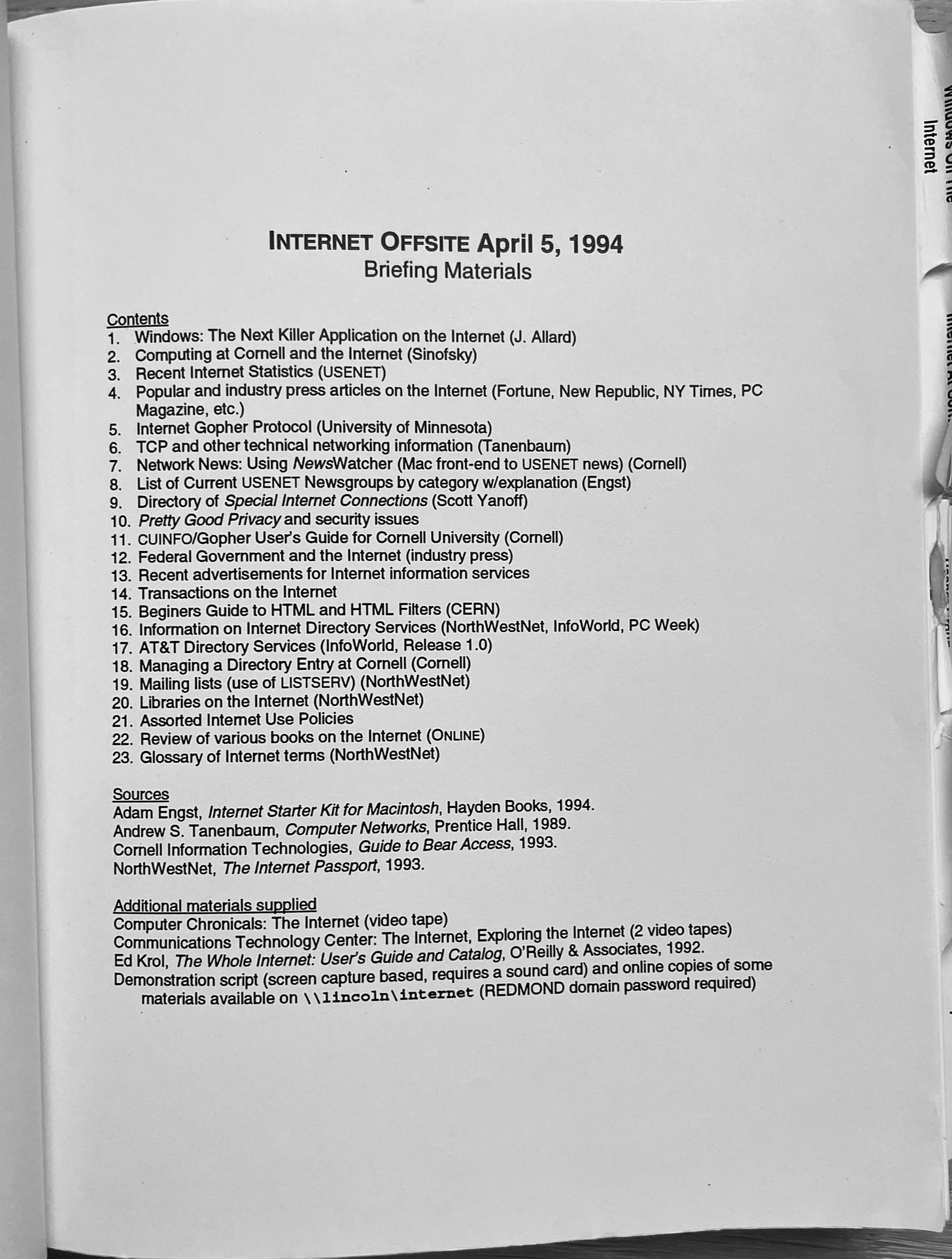

With the offsite scheduled for April 5, 1994 (coincidentally the day after the incorporation of Mosaic Communications Corporation, later renamed Netscape Communications Corporation, created by legendary SGI founder Jim Clark and original Mosaic programmer Marc Andreessen), I prepared the mother of all briefing books. No offsite was complete without an elaborate briefing book. I hand-carried the entire thing to the copy center (MSCOPY) and ordered 30 copies, double-sided, bound, with tabs. They called me an hour later and told me I needed two volumes, so I headed back and removed enough pages to keep it at the 300-page limit.

Looking at the book now, it serves as a great reminder of just how small the whole of the internet was back then. One of the books I ordered for many people was by Ed Krol, The Whole Internet: User’s Guide and Catalog. How crazy to think that the entirety of the Internet could be represented as a book and cataloged, but that was sort of what it was. Similarly, the technology underpinnings were perhaps 100 pages of protocols and formats that everyone at the offsite could easily absorb.

Episode of Computer Chronicles from 1993, hosted by Stewart Cheifet. (Source: https://archive.org/details/computerchronicles)

One of the most popular prep materials was a videotape copy of an episode of The Computer Chronicles, the award-winning public television show hosted by Stewart Cheifet from 1983 to 2002. The video was essentially the entire briefing book in a one-hour television segment. It is a remarkable time capsule of the 1993 Internet. It was already a bit out of date by the time of the offsite, but it was easily absorbed, especially for those who did not come by my office for a demo.

Perhaps I got a little carried away.

About 20 people gathered at Shumway Mansion in Kirkland at about 8 a.m., early for developers. In his introduction, delivered without any slides, Bill improvised the term “mania” to describe the internet and emphasized a core company value, which was that exponential phenomena cannot be ignored. The internet was exponential. He said something that I thought was critically important and returned to time and time again over the years that followed. He told us the internet was not to be “studied.” It was already decided that it would be a critical part of our next wave of products. We were kicking off the process to decide what to do, not if we should do anything. His choice of words and body language were as strong as his email a few years earlier declaring Windows our strategy.

![Memo To: Bill Gates, Brad Silverberg, Craig Mundie, Dave Leinweber, Dave Macdonald, Bob Frankston, Jabe Blumenthal, James 'J' Allard, Jim Allchin, John Ludwig, Nathan Myhrvold, Pat Bellamah, Peter Neupert, Peter Pathe, Rob Shurtleff, Ron Souza, Russell Siegelman, Steven Sinofsky, Steve Wilson, Tom Evslin From: Steven Sinofsky Subject: Internet Offsite: Group Presentations Date: April 15, 1994 cc: Mike Maples, Steve Ballmer, Paul Maritz, Roger Heinen, Pete Higgins, Deborah Willingham Introduction A one day off-site was held on April 6, with the goal educating, evangelizing, and strategizing about the internet phenomenon within the key organizations at Microsoft. The attendees were given background materials and most saw live demonstrations. The retreat started with a brief introduction by BillG, after which he introduced the discussion topics for the breakout groups. There were three breakout groups, Systems, Tools and Services, and On-line strategy. Each group was given approximately 4 hours to prepare a presentation addressing the issues raised by BillG. Each group was then given one hour to present the results. The notes below are taken from these group presentations. The following are the notes from the retreat. The recommendations are still preliminary. BillG will be following up with some priorities and responsibilities. BillG Overview Embrace and Extend are the two key words for how Microsoft will inter-operate with the internet. This retreat is about how Microsoft will embrace the current internet mania and extend Internet Protocols, Applications, and Tools. We also need to look at how we can leverage the internet to improve our support and services available to customers. At each step of this strategy we need to be aware of the business implications of what we are doin. For example we should be sure to be getting credit for the good work that we do. The use of the term mania best describes what is currently going on regarding the internet. Everywhere I [BillG] go people ask me about how Microsoft will be on the internet. People want to know when we will provide support services on the internet, they want to know when we will release internet software such as Mosaic, and they want to know how our future information products (and our information at your fingertips vision) relate to the internet. Many people feel that the internet is the real-live digital highway. In many ways, what we are seeing on the internet is a narrow band version of the highway. The rate of growth in internet use, servers, and clients is amazing. Perhaps it is greater than any growth we will ever see for any industry. Very few things grow exponentially as the internet is clearly doing today.](https://substackcdn.com/image/fetch/$s_!ZhiD!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2Fdd7bb6bb-bce5-4ed9-94da-015cc5ec644a_1062x1072.png)

In order to develop a plan, we divided into three groups, each given a set of questions:

Systems. How do we make Microsoft platforms the preferred choice for internet as both a client and server? How do we make internet applications available given that most everything is free? What is the internet experience missing that we could provide? How does Cairo/EMS (the next, next generation OS and the new mail server, both very early in development) complement or conflict with the above?

Tools and Services. Can Microsoft use the internet for customer support? How do we connect with developers using the internet? If we use the internet for support will we get credit for providing better support compared to what we do on CompuServe? How do our existing tools such as WinHelp and Word relate to/benefit from/compete with internet formats? Where do the new tools being developed for Marvel (notably a tool known as Blackbird) fit in?

Online Strategy. Should our online service Marvel embrace the internet? How do we make our clients the best internet clients? What value do we bring to the internet community?

The natural reaction to such a situation at Microsoft was not to push back because of schedules or capacity, but rather to go after the other side on technical grounds. The technique of arguing against a new technology (a competitive product or an alternative architecture, for example), not on the basis of one’s own constraints but on the lack of merits of the other approach, was known as applying the technology buzzsaw. The basic goal was to find all the flaws on the other side to avoid admitting a lack of the engineering agility to get it done.

As an example, with respect to the HTML format, there were two schools of thought. Blackbird was chartered to create a high-end authoring tool to enable content creators to make rich, interactive content for the Marvel network, like our CD-ROM titles. It cast a very long shadow and was a widely feared (and misunderstood) product, even without ever shipping. In a relative sense, HTML was a trivial subset of what “Hollywood” or magazines needed to bring their brands to the WWW. Marvel was embracing that class of content owner as a core potential partner, so HTML was broadly deficient. At the same time, a divergent view came from the Word team that embraced being able to edit HTML from within Word—Word routinely dealt with formats with lower fidelity, so it seemed perfectly fine to think of HTML as a supported format. In fact, HTML was even a subset of a just released add-on for Word called SGML Author (SGML was a mega-standard upon which HTML was loosely based).1

Connectivity to AOL and CompuServe used the X.25 telecom standard—that is, connectivity provided by analog, dial-up phone lines—so ubiquitous and reliable that it was a stronghold for the telecom companies. The idea that consumers had access to the internet outside of that network or even that the packet-switched network (TCP/IP) would mature to be reliable and widely available seemed crazy. Others, seeing the exponential growth in internet users connecting with local connectivity providers using new packet-switched protocols, believed it was investing in legacy to even consider worrying about old-style connectivity and partners.

This led to a good debate over whether and how Marvel should focus purely on internet protocols for the service.

There was also an interesting conversation taking place surrounding various new projects intersecting, with no real way to reconcile the overlap between them. The relationship between the new mail service EMS and the new online service Marvel was one example, and a topic that continued to smolder. Marvel, competing with AOL, would clearly have email and discussion boards. Marvel was already working to understand a potential relationship to USENET (and the NNTP protocol it used). EMS was an enterprise mail service just starting to be able to handle email for a few people at Microsoft. A big and differentiating feature of EMS was going to be Public Folders, or essentially shared email boxes that looked a lot like the USENET experience, and, like Marvel, EMS was also trying to figure out the relationship of its feature to USENET. The EMS design point was enterprise IT and a highly managed environment for intense email usage in the workplace, not the mass-scale lightweight consumer mail Marvel envisioned. Some things took decades to resolve and the email strategy was one of them. Blackbird, Marvel, and EMS overlapped with each other and also with the internet. It was both stunning and kind of ridiculous. These products didn’t yet exist, and the internet did. It is impossible to catch up to something growing exponentially. That doesn’t stop debates at a big company, though, as I was learning.

Considering that the Internet, within the halls of Microsoft and for most attendees, was only months old, there was a broad consensus that change was in the air. The closer a group’s products were to the internet the more the discussion was about schedules and constraints. The further away from shipping a team was, the more the internet seemed like a great idea. That’s the opposite of what we needed, though.

The critical exception to this observation was Systems, as the first team to use the day to validate and expand plans already in place and to express a strategy. Systems intended to ensure both OS projects underway were the best client and server for the internet. The details mattered, though.

At the base level the forethought from the networking group on NT Daytona, the code name of the next release of Windows NT, was paying off. They were well down the path of implementing the required networking infrastructure. These were the essential ingredients to “get on the internet” with a Daytona computer. There were many implementation challenges to reuse this work on Chicago, which was still debating how fully 32-bit the operating system was, and also how much low-level compatibility existed between Chicago and Daytona for code like networking drivers.

The group concluded that implementing applications that made the internet interesting was critical. Those responsible decided that building news, mail, Gopher, and a WWW browser were goals.

In early 1994, the internet was not just the WWW. The internet was made up of many different services, each a combination of server code, client code, and then ultimately one or more viewers. For example, Gopher had a server that maintained the hierarchy for the site, a Gopher client that navigated that site, and then any number of viewers that could be launched to view the “leaf” of the Gopher tree. For example, there might be a Gopher site that eventually led to photos, or a bunch of Word documents, which launched an image editor, or Word to view them. The WWW had rich text, links, and images all in one “viewer,” and a simple server setup. But as I was showing off in my demos, many WWW sites were simply navigations to content that the browsers did not understand, such as music or video files.

As the debate and discussion of solutions continued, my feeling at the time was there was a lot of wheel spinning considering the galactic shift that the internet appeared to be. Perhaps because I was relatively early to the space, I had become a zealot? Or maybe I was so down on such inward-facing debates given what I had seen firsthand. It was a challenge to relay the experience I had on Cornell’s campus to teams—I sounded like a crazy person, like a junior person back from his or her first customer visit or conference. I sounded like I sounded when I came back from USENIX with a changed view on C++, though that worked out pretty well.

Looking back, by the end of the offsite, some converted to internet zealots. In many ways the zealots, myself included, left that day with the feeling that Marvel was going to either happen or it wasn’t, but that there wasn’t much that could really be done since it seemed so different than the direction we should have been going in. Marvel felt like taillights, competing from behind and not vision-setting. It is certainly easier to say that today seeing where things went.

One of the most difficult challenges to understand, until you have lived through it, is the pressure to keep moving forward even in the face of disruption. The biggest lesson I learned in just the short time between getting trapped in the snow and this first week of April 1994 was just how much of what happens in a company is a result of the momentum of a product (or technology) and the structure of the organization in place. Make no mistake, as a manager I would have my very own challenges in this regard even though I lived through this very experience.

The offsite did not come to a dramatic end with a key developer quitting, as the retreat that bet on graphical interface had, but it was an incredible day. There’s no doubt it was very important to urgently bring everyone together and for Bill to make it abundantly clear just how much we were betting on the Internet, and he did so without hesitation. Many would look to the Internet Tidal Wave memo years later as the clarion call, when in every respect this was the pivotal day in the journey to an internet-centric company.
