228. DeepSeek Has Been Inevitable and Here's Why (History Tells Us)

DeepSeek was certain to happen. The only unknown was who was going to do it. The choices were a startup or someone outside the current center of US AI leadership and innovation.

TL;DR for this article: DeepSeek was always going to happen. We just didn’t know who would do it. It was either going to be a startup or someone outside the current center of leadership and innovation in AI, which is mostly clustered around trillion-dollar companies in the US. It turned out to be a group in China, which for many—myself included—is unfortunate.

But again, it absolutely was going to happen. The next question is: will US technologists recognize DeepSeek for what it is?

There’s something we used to banter about when things seemed really bleak at Microsoft: When normal companies scope out features and architecture they use t-shirt sizes: small, medium, and large. At its peak, Microsoft seemed capable of only thinking in terms of extra-large, huge, and ginormous. That’s where we are with AI today and the big company approach in the US.

There's more in The Short Case for Nvidia Stock which is very good but focuses on picking stocks, which isn't my thing. Strategy and execution are more me so here's that perspective.

The current trajectory of AI if you read the news in the US is one of MASSIVE capital expenditures (CapEx) piled on top of even more MASSIVE CapEx. It’s a race between Google, Meta, OpenAI/Microsoft, xAI, and to a lesser extent a few other super well-funded startups like Perplexity and Anthropic. All of these together are taking the same approach which I will call “scale up”. Scale up is what you do when you have access to vast resources as all of these companies do.

The history of computing is one of innovation followed by scale up which is then broken by a model that “scales out”—when a bigger and faster approach is replaced by smaller and more numerous approaches.

Mainframe→Mini→Micro→Mobile

Big iron→Distributed computing→Internet

Cray→HPC→Intel/CISC→ARM/RISC

OS/360→VMS→Unix→Windows NT→Linux, and on and on.

You can see this pattern play out at the macro level throughout the history of technology—or you can see it at the micro level with subsystems from networking to storage to memory.

The past five years of AI brought us bigger models, more data, more compute, and so on. Why? Because I would argue the innovation was driven by the cloud hyperscalers, whose approach was destined to do more of what they had already done. They viewed data for training and huge models as their way of winning and their unique architectural approach. The fact that other startups took a similar approach is just Silicon Valley at work—people move and optimize for different things at a micro scale without considering the larger picture. (See the sociological and epidemiological term small area variation.) People try to do what they couldn’t do in their previous efforts, or what their previous efforts might have overlooked.

The degree to which the hyperscalers believed in “scale up” is obvious when you consider the fact that they are all building their own silicon or custom AI chips. As cool as this sounds, it has historically proven very, very difficult for software companies to build their own silicon. While many look at Apple as a success, Apple’s lessons emerged over decades of not succeeding PLUS they build devices not just silicon. Apple learned from 68k, PPC, and Intel—the previous architectures Apple used before transitioning to its own custom ARM chips—how to optimize a design for their use-cases. Those building AI hardware were solving their in-house scale up challenges—and I would have always argued they could gain percentages at a constant factor, but not anything beyond that.

Nvidia is there to help everyone not building their own silicon and those who want to build their own silicon but are also trying to meet their immediate needs. As described in “The Short Case” Nvidia also has a huge software ecosystem advantage with their CUDA platform, something they have honed for almost two decades. It is critically important to have an ecosystem, and they have been successful at building one. This is why I wrote and thought the Nvidia DIGITS project is far more interesting than simply a 4000 TOPS (tera operations per second) desktop (see my CES report).

So now where are we? Well, the big problem we have is that the large-scale solutions, regardless of all the progress, are consuming too much capital. But beyond that the delivery to customers has been on an unsustainable path. It’s a path that works against the history of computing, which shows us that resources need to become less—not more—expensive. The market for computing simply doesn’t accept solutions that cost more, especially consumption-based pricing. We’ve seen Microsoft and Google do a bit of resetting with respect to pricing in a move to turn their massive CapEx efforts into direct revenue. I wrote at the time of the initial pricing announcements that there was no way that would be sustainable. It took about a year. Laudable goal for sure but just not how business customers of computing work. At the same time, Apple is focused on the “mostly free” way of doing AI, but the results are at best mixed, and they’re still deploying a ton of CapEx.

Given that, it was inevitable that someone was going to look at what was going on and build a “scale out” solution—one that does not require massive CapEx, with architectural approaches that use even less CapEx to build and train the product.

The example that keeps running through my mind is how AT&T looked at the internet. In all the meetings Microsoft had with AT&T decades ago about building the “information superhighway,” AT&T was completely convinced of two things. First, the internet technologies being shown were toys—they were missing all the key features such as being connection based or having QoS (quality of service). For more on toys, see “[...] Is a Toy” by me.

Second, they were convinced that the right way to build the internet was to take their phone network and scale it up. Add more hardware and more protocols and a lot more wires and equipment to deliver on reliability, QoS, and so on. They weren’t alone. Europe was busy building out internet connectivity with ISDN over their telecommunications networks. AT&T loved this because it took huge capital and relied on their existing infrastructure.

They were completely wrong. Cisco came along and delivered all those things on an IP-based network using toy software like DNS. Other toys like HTTP and HTML layered on top. Then came Apache, Linux, and a lot of browsers. Not only did the initial infrastructure prove to be the least interesting part, but it was also drawn into a “scale out” approach by a completely different player, one who had previously focused on weird university computing infrastructure. Cisco did not have tens of billions of dollars nor did Netscape nor did CERN. They used what they could to deliver the information superhighway. The rest is history.

As an example, there was a time when IBM measured the mainframe business by MIPS (millions of instructions per second). The reality was they had 90 percent plus share of MIPS. But in practice they were selling or leasing MIPS (not the chip company from Stanford) at ever decreasing prices, just like Intel sold transistors for less over time. This is all great until you can get MIPS for even less money elsewhere, which Intel soon delivered. Then ARM found an even cheaper way to deliver more. You get the picture. Repeat this for data storage and you have a great chapter from Clay Christensen’s Innovator’s Dilemma.

Another challenge for the current AI hyperscalers is that they have only two models for bringing an exciting—even disruptive—technology to market.

First, they can bundle the technology as part of what they already sell. This de-monetizes anyone trying to compete with you. Of course, regulators love to think of this as predatory pricing, but the problem is software has little marginal cost (uh oh) and the whole industry is made up of cycles of platforms absorbing more technology from others. It is both an uphill battle for big companies to try to sell separate things (the salespeople are busy selling the big thing) and an uphill battle to try to keep things separate since someone is always going to eventually integrate them anyway. Windows did this with Internet Explorer. Word did this with Excel or Excel did this with Word depending on your point of view (See Hardcore Software for the details). The list is literally endless. It happens so often in the Apple ecosystem that it has a name and is called Sherlocking. The result effectively commoditizes a technology while maintaining a hold on distribution.

Second, AI hyperscalers can compete by skipping the de-monetization step and going straight to commoditization. This approach is one that counts on the internet and gets distribution via the internet. Nearly everything running in the cloud today is built on this approach. It really starts with Linux but goes through everything from Apache to Git to Spark. The key with this approach, and what is so unique about it, is open source.

Meta has done a fantastic job at being open source but it’s still relying on an architectural model that consumes tens of billions of dollars in CapEx. Meta, much like Google, could also justify CapEx by building tools that make their existing products better; open-source Llama is just a side effect that is good for everyone. That is not unlike Google releasing all sorts of software, from Chromium to Android. It’s also what Google did to de-monetize Microsoft when they began Gmail, ChromeOS, and its suite of productivity tools (Google Docs was originally just free, presumably to de-monetize Office). Google can do this because they monetize software with services on top of what they do with the open source they release. Their magic lies in the fact that their value-add on top of open source is not open source per se, rather it’s their hyperscale data centers running their proprietary code using their proprietary data. By releasing all their products as open source they are essentially trying to commoditize AI. The challenge, however, is the cost. This is what happened with Hotmail, for example—turns out that at massive scale, even a 5MB free mailbox adds up to a lot of subsidies.

That’s why we see all the early AI hyperscaler products take one of two approaches: bundling or mostly open source. Those outside the two models are in a sense competing against bundles and against the companies trying to de-monetize the bundles. Those outside are caught in the middle.

The cost of AI, like the cost of everything from mainframe computing to X.25 connectivity (the early pre-TCP/IP protocol for connecting computers over phone lines), literally forces the market to develop an alternative that scales without the massive direct capital.

By all accounts the latest approach with DeepSeek seems to be that. The internet is filled with analysts trying to figure out just how much cheaper, how much less data, or how many fewer people were involved. In algorithmic complexity terms, these are all constant factor differences. The fact that DeepSeek runs on commodity, disconnected hardware and is open source is enough of a shot across the bow of the approach to AI hyperscaling that it can be seen as “the way things will go”.

I admit this is all confirmation bias for me. We’ve had a week with DeepSeek, and people are still poring over it. The hyperscalers and Nvidia have massive technology roadmaps. I’m not here for stock predictions at all. All I know for sure is that if history offers any advice to technologists, it’s that core technologies become free / commodities and, because of internet distribution and de facto market standardization at many layers, that happens sooner with every turn of the crank.

China faced an AI situation not unlike Cisco’s. Many (including “The Short Case”) are looking at the Nvidia embargo as a driver. The details don’t really matter. They just had different constraints. They had many more engineers to attack the problem than they had data centers to train with. They were inevitably going to create a different kind of solution. In fact, I am certain someone somewhere would have. It’s just that, in hindsight, China was especially well-positioned.

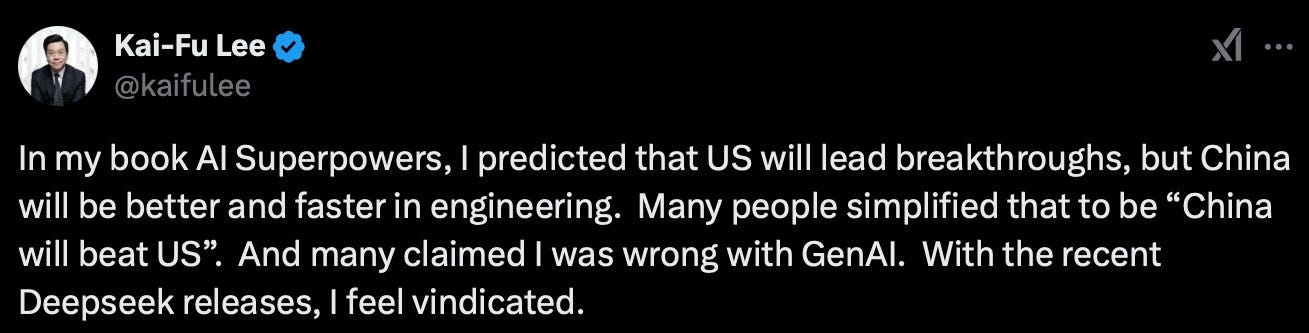

Kai-Fu Lee recently argued DeepSeek proved that China was destined to out-engineer the US. Nonsense I say. That’s just trash talk. China took an obvious and clever approach that US companies were mostly blind to because of the path that got them to where they are today before AI. DeepSeek is just a wakeup call.

I’m confident many in the US will identify the necessary course corrections. The next Cisco for AI is waiting to be created, I’m sure. If that doesn’t happen then it could also end up the way browsers did, where a big company (or three) just bundles it for everyone to use. Either way, the commoditization step is upon us.

Get building. Scale out, not up. 🚀

—Steven Sinofsky
