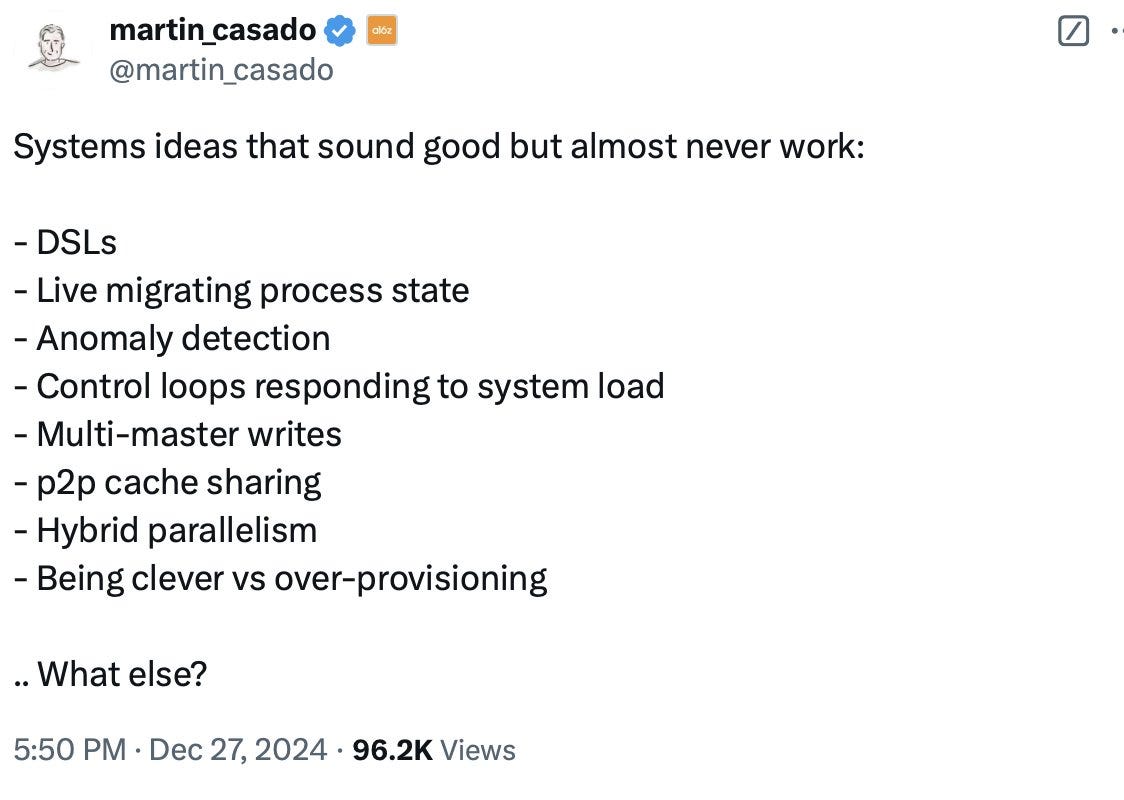

225. Systems Ideas that Sound Good But Almost Never Work—"Let's just…"

Some engineering patterns that sound good but almost never work as intended

@Martin_Casado tweeted some wisdom (as he often does) in this:

He asked what else, and I replied with a quick list. Below is “why” these don’t work. I offer this recognizing that engineering is also a social science and that what works or does not work is context dependent. One life lesson is that every time you say to an engineer (or post on X) that something won’t work, it quickly becomes a challenge to prove otherwise. That’s why most of engineering management (and software architecture) is a combination of “rules of thumb” and lessons learned the hard way.

I started my list with “let’s just” because 9 out of 10 times when someone says “let’s just,” what follows is going to be ultimately way more complicated than anyone in the room thought it would be. I’m going to say “9 out of 10 times” a lot below on purpose because…experience. I offer an example or two below, but for each there are probably a half dozen I lived through.

So why do these below “almost never work”?

Let's just make it pluggable. When you are pretty sure one implementation won’t work, you think “I know, we’ll let developers or maybe others down the road use the same architecture and just slot in a new implementation.” Then everyone calling the APIs magically gets some improvement or new capability without changing anything. There’s an old saying: “the API is the behavior, not the header file/documentation.” Almost nothing is pluggable to the degree that it “just works.” The most pluggable components of modern software are probably device drivers, which enabled the modern computer but worked so poorly that either they are no longer allowed or modern OSs have been building their own for a decade. The only way something is truly pluggable is if a second implementation is designed at the exact same time as the primary implementation. Then at least you have proof it can work…one time.
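To make “the API is the behavior” concrete, here is a minimal Python sketch. All of the names (`KeyValueStore`, `DictStore`, `SortedStore`, `first_key`, `load`) are hypothetical. Both implementations satisfy the same declared interface, yet a caller that quietly relied on the first one’s iteration order gets a different answer when the second is slotted in.

```python
from abc import ABC, abstractmethod
from typing import Iterator


class KeyValueStore(ABC):
    """Hypothetical 'pluggable' interface: signatures are all a plug-in must match."""

    @abstractmethod
    def put(self, key: str, value: str) -> None: ...

    @abstractmethod
    def keys(self) -> Iterator[str]: ...


class DictStore(KeyValueStore):
    """Original implementation: keys come back in insertion order (a dict guarantee)."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self._data[key] = value

    def keys(self) -> Iterator[str]:
        return iter(self._data)


class SortedStore(KeyValueStore):
    """'Drop-in' replacement: identical signatures, but keys come back sorted."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self._data[key] = value

    def keys(self) -> Iterator[str]:
        return iter(sorted(self._data))


def first_key(store: KeyValueStore) -> str:
    """A caller that implicitly depended on insertion order."""
    return next(store.keys())


def load(store: KeyValueStore) -> KeyValueStore:
    store.put("zebra", "1")
    store.put("apple", "2")
    return store


print(first_key(load(DictStore())))    # zebra
print(first_key(load(SortedStore())))  # apple
```

Nothing in the interface says anything about ordering, so neither implementation is “wrong”; the behavior callers came to rely on simply was never part of the contract.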

Let's just add an API. Countless products/companies have sprinted to some level of success and then decided “we need to be a platform and have developers,” and soon enough there is an API. The problem with offering an API is multidimensional. First, being an API provider is itself a whole mindset and skill: you constantly trade compatibility and interoperability for features, you are constrained in how things change because of legacy behavior or performance characteristics, and you can basically never move the cheese around. More importantly, offering an API doesn’t mean anyone wants to use it. Almost every new API comes up because the co/product wants features but doesn’t want to prioritize them enough (too small a market, too vertical, too domain specific, etc.), and the theory is that the API will be “evangelized” to some partner in the space. Turns out those people are not sitting around waiting to fill in holes in your product. They have a business and customers too, and those customers don’t want to buy into yet another product to solve their problem. Having an API (being a platform) is a serious business with real demands. There is magic in building a platform, but rarely does one come about by simply “offering some APIs,” and even if it does, the chances it provides an economic base for third parties are slim. Tough stuff! Hence the reward.

Let's abstract that one more time. One of the wisest computer scientists I ever got to work with was the legend Butler Lampson (Xerox, MIT, Microsoft, etc.), who once said, “All problems in computer science can be solved by another level of indirection” (the “fundamental theorem of software engineering,” as it is known). There is truth to this, real truth. There are two reasons this fails. First, engineers often know this ahead of time, so they put abstractions into the architecture too soon. Windows NT is riddled with excess abstractions that were never really used, primarily because they were there from the start, before there was a real plan to use them. I would contrast this with Mac OS evolution, where abstractions that seemed odd turned out to be useful two releases later because there was a plan. Second, abstractions added after the fact can become very messy to maintain, difficult to secure, and challenging to optimize for performance. Because of that, you end up with too much code that does not use the new abstraction. Then you have a maintenance headache.

Let's make that asynchronous. Most of the first 25 years of computer science was figuring out how to make things work asynchronously. If you were a graduate student in the 1980s, you spent whole courses talking about dining philosophers or producers-consumers or sleeping barbers. Today’s world has abstracted this problem away for most engineers, who just operate by the rules at the data level. But at the user experience level there remains a desire to get more stuff done and never make people wait. Web frameworks have done great work to abstract this. But 9 out of 10 times, once you go outside a framework or the data layer and think you can manage asynchrony yourself, you’ll do great except for the bug that shows up a year from now that you will never be able to reproduce. Hopefully it won’t be a data corruption issue, but I warned you.
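The classic failure is the lost update. Below is a minimal Python `asyncio` sketch, assuming a shared counter and two concurrent tasks (the function names are hypothetical). The `await asyncio.sleep(0)` stands in for any real I/O between a read and a write. Here the interleaving happens to be deterministic; real threads, networks, and devices give you no such guarantee, which is why the production version of this bug is so hard to reproduce.

```python
import asyncio


async def increment_unsafe(state: dict) -> None:
    current = state["count"]        # read
    await asyncio.sleep(0)          # yield: another task can run here
    state["count"] = current + 1    # write based on a now-stale read


async def increment_locked(state: dict, lock: asyncio.Lock) -> None:
    async with lock:                # read-modify-write becomes one critical section
        current = state["count"]
        await asyncio.sleep(0)
        state["count"] = current + 1


async def run_unsafe() -> int:
    state = {"count": 0}
    await asyncio.gather(increment_unsafe(state), increment_unsafe(state))
    return state["count"]


async def run_locked() -> int:
    state = {"count": 0}
    lock = asyncio.Lock()
    await asyncio.gather(increment_locked(state, lock), increment_locked(state, lock))
    return state["count"]


print(asyncio.run(run_unsafe()))  # 1: one increment was silently lost
print(asyncio.run(run_locked()))  # 2
```

The lock fixes this toy case, but the hard part in real systems is knowing every place a shared value is read and written, which is what frameworks and the data layer do for you.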

Let's just add access controls later. When we weren’t talking about philosophers using chopsticks in grad school, we were debating where exactly in a system access control should be. Today’s world is vastly more complex than the days of theoretical debates about access control because systems are under constant attack. Of course everyone knows systems need to be secure from the get-go, yet the pace to get to market means almost no system has fully thought through the access control/security model from the start. There’s almost no way to get the design of access controls for a product right unless you think about it from both the customer and the adversary perspective from the start. No matter how expeditious deferring it might feel, you will either fail or need to rewrite the product down the road, and that will be a horrible experience for everyone, including customers.
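One concrete reading of “from the start” is that every operation passes through a single, deny-by-default authorization check, rather than checks sprinkled in later wherever someone remembers. Here is a minimal Python sketch under that assumption; the role/action policy table and all names (`POLICY`, `authorize`, `delete_document`) are hypothetical, and real systems also need resource ownership, tenancy, auditing, and much more.

```python
from dataclasses import dataclass

# Hypothetical policy: which roles may perform which actions. Anything absent is denied.
POLICY: dict[str, set[str]] = {
    "viewer": {"read"},
    "editor": {"read", "write"},
    "admin": {"read", "write", "delete", "share"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str


class PermissionDenied(Exception):
    pass


def authorize(user: User, action: str) -> None:
    """Single choke point: unknown roles and unknown actions are denied by default."""
    if action not in POLICY.get(user.role, set()):
        raise PermissionDenied(f"{user.name} ({user.role}) may not {action}")


def delete_document(user: User, doc_id: str) -> str:
    authorize(user, "delete")  # checked before any work happens
    return f"deleted {doc_id}"


print(delete_document(User("ana", "admin"), "doc-1"))  # deleted doc-1
try:
    delete_document(User("bo", "editor"), "doc-1")
except PermissionDenied as err:
    print(err)  # bo (editor) may not delete
```

Retrofitting this shape onto an existing product means finding every code path that touches data and routing it through the check, which is where the rewrite comes from.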

Let's just sync the data. In this world of multiple devices, SaaS apps, or data stores, it is super common to hear someone chime in, “why don’t we just sync the data?” Ha. @ROzzie (Ray Ozzie), who got his start on the Plato product, invented Lotus Notes as well as Groove and Talko, and led the formation of Microsoft Azure, was a pioneer in client/server and data sync. His words of wisdom: “synchronization is a hard problem.” And in computer science a hard problem means it is super difficult and fraught with challenges that can only be learned by experience. This problem is difficult enough with a full semantic and transacted data store, but once it involves synchronizing blobs or unstructured data, or worse, data translation of some kind, it very quickly becomes enormously difficult. Almost never do you want to base a solution on synchronizing data. This is why there are multi-billion dollar companies that do sync.
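Part of why sync is hard is that you first have to detect when two copies changed independently, and only then decide what to do about it. Below is a minimal Python sketch of version-vector comparison, a standard technique for that detection step (not a claim about how any product mentioned here works); the replica names `laptop` and `phone` are hypothetical.

```python
def compare(a: dict[str, int], b: dict[str, int]) -> str:
    """Compare two version vectors (replica id -> number of updates seen from it)."""
    replicas = set(a) | set(b)
    a_ahead = any(a.get(r, 0) > b.get(r, 0) for r in replicas)
    b_ahead = any(b.get(r, 0) > a.get(r, 0) for r in replicas)
    if a_ahead and b_ahead:
        return "concurrent"  # both sides changed independently: a true conflict
    if a_ahead:
        return "a_newer"     # a has seen everything b has, plus more
    if b_ahead:
        return "b_newer"
    return "equal"


# Both devices started from {"laptop": 1, "phone": 1}, then each edited offline.
laptop = {"laptop": 2, "phone": 1}
phone = {"laptop": 1, "phone": 2}
print(compare(laptop, phone))                     # concurrent
print(compare({"laptop": 2, "phone": 2}, phone))  # a_newer
```

Detection is the easy half. For a concurrent pair, a naive “last writer wins” policy silently discards one of the two edits, and nothing here tells you how to merge two edits to the same blob.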

Let's make it cross-platform. I have been having this debate my whole computing life. Every time it comes up, someone shows me something they wrote that they believe works “great” cross-platform, or someone tells me about Unity and games. Really clever people think that they can just say “the web.” I get that, but I’m still right :-) When you commit to making something cross-platform, no matter how customer focused and good your intentions are, you are committing to build an operating system, a cloud provider, or a browser. As much as you think you’re building your own thing, by committing to cross-platform you are essentially building one of those by just “adding a level of indirection” (see Butler Lampson above). You think you can just make a pluggable platform (see above). The repeated reality of cross-platform is that it works well in only two situations. It works when platforms are new (when the cloud was compute and simple storage, for example), because then being an abstraction across two players doing that simple thing makes sense. It works when your application/product is new and simple. Both fail as you diverge from the underlying platform or as you build capabilities that are expressed wildly differently on each target. The most “famous” example for me is when Microsoft gave up on building Office for Mac and Windows from the same code, precisely because it became too difficult. Realize that Microsoft essentially existed because it built its business on cross-platform apps. That worked when an OS API was 100 pages of docs and each OS was derived from CP/M. We forked the Office code in 1998 and never looked back. Every day I use Mac Office I can see how, even today, it remains impossible to do a great job across platforms. For more of my view on this, see: https://medium.learningbyshipping.com/divergent-thoughts-on-cross-platform-updated-68a925a45a83

Let's just enable escape to native. Since cross-platform only works for a brief time, one of the most common solutions that frameworks and API abstractions offer is the ability to “escape to native.” The idea is that when the platform evolves or adds features that the framework/abstraction doesn’t (yet?) expose, presumably because the framework would have to build a whole implementation for the other targets that don’t yet have the capability, you can call the native platform directly. This really sounds great on paper. It too fails more than 9 out of 10 times. The reason is pretty simple. The framework or API you are using that abstracts out some native capability always maintains some state or a cache of what is going on within the abstraction it created. When you call the underlying native platform, you muck with data structures and state that the framework doesn’t know about. Many frameworks provide elaborate mechanisms to exchange data or state information from your “escape to native” code back to the framework. That can work a little bit, but in a world of automatic memory management it is a solution akin to malloc/free, and I am certain no one today would argue for that architecture :-)
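The stale-state problem fits in a few lines. In this minimal Python sketch, `NativeWindow` stands in for a platform object and `FrameworkWindow` for a cross-platform wrapper that caches what it last set; both classes and the `native_handle()` escape hatch are hypothetical.

```python
class NativeWindow:
    """Stand-in for a platform object that the framework wraps."""

    def __init__(self) -> None:
        self.title = ""


class FrameworkWindow:
    """Stand-in for a cross-platform wrapper that caches state to avoid native calls."""

    def __init__(self) -> None:
        self._native = NativeWindow()
        self._cached_title = ""

    def set_title(self, title: str) -> None:
        self._native.title = title
        self._cached_title = title  # the framework's own view of the world

    @property
    def title(self) -> str:
        return self._cached_title   # answered from the cache, not the platform

    def native_handle(self) -> NativeWindow:
        """The 'escape to native' hatch: hands out the underlying object."""
        return self._native


win = FrameworkWindow()
win.set_title("Report")
win.native_handle().title = "Report (edited natively)"  # the framework is never told
print(win.title)                  # Report  <- stale
print(win.native_handle().title)  # Report (edited natively)
```

Keeping the two views consistent requires the caller to tell the framework about every native change, which is exactly the manual, malloc/free-style bookkeeping described above.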

Have I always been a strong “no” on all of these? Of course not. Can you choose these approaches and have them work? Yes, of course you can. There’s always some context where these might work, but most of the time you just don’t need them and there’s a better way. Always solve with first principles and don’t just jump to a software pattern that is so failure prone.

—Steven

I loved this list! Is there a counter-example/outlier from this list from your career where you were like, "I can't believe that worked as well as it did!"

> I would contrast this with Mac OS evolution where abstractions that seemed odd appeared useful two releases later because there was a plan.

Interesting. I was born a bit too late to experience the heyday of OS evolution in the 1990s-2000s. Would love to read more about what kinds of abstractions Apple got right.