220. Are AI Expectations Too High or Misplaced?

Have expectations for AI been getting too high? Not high enough? Thinking about how AI has evolved in past boom/bust cycles.

LLMs and Search

The idea that current state-of-the-art LLMs would simply replace search might go down as one of the most premature, or potentially misguided, strategy “blunders” in a long time. Check out the example below, though it is just one of an endless set of places where LLMs convincingly provide an incorrect answer.

There was nothing about what ChatGPT was doing at launch (or now) that would have indicated that using it as a replacement for search made sense, even though it looked like it might. You can’t ask questions you don’t know the answers to of an oracle designed without a basis in fact and just take its answers at face value because they “sound good.” Because these answers sound so definitive, and the chat interface will give a great-sounding answer to almost any legitimate question, it is left as an exercise for the prompter to then Google the answers to verify them. It is almost literally the reality described in “The Media Equation.”

The fact that chat gives different answers to different people, or at different times, should immediately have jumped out as a feature of a different use case, not a bug in search.

The butterfly effect of this mistake is incredible. It directed so many efforts away from the amazing strengths of LLMs, made regulators take note of a technology that would “disrupt” Google (so it must be important), and implied that anything so powerful needed immediate regulation. As hallucinations (I seriously dislike the use of that anthropomorphism) became routine, even more focus on regulation was needed, because we obviously can’t have wrong answers on the internet. Fears of LLMs designing chemical weapons or instructing people how to do dangerous things, when the same LLM could send you down the wrong path baking cookies, were peak over-fitting of concerns. The rush to overtake Google with LLMs put the news and entertainment industries on high alert, given their past experiences, and with that came a rash of copyright litigation.

At the same time, it meant people were not focused on the creative and expressive power of the technology, the things it did so uniquely well. Worse, those seemed like pedestrian use cases compared to the grand vision of replacing whole parts of the economy.

It reminds me of when Bing let “Sydney” out, and it was even more creative than we could have hoped. But then the panic set in, and now here we are. Sydney is gone. Bing is using OpenAI for search results. Google is in a panic and has rushed to inject Gemini into its search results.

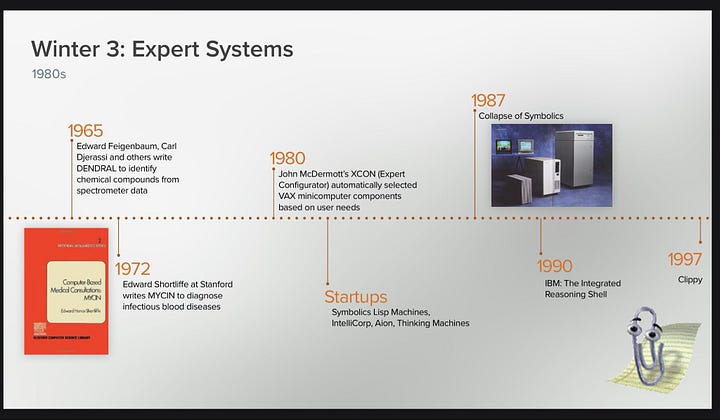

It feels like when expert systems came around in the 1980s and the early use cases touted were simple things like curing cancer or diagnosing diseases, which were just life-or-death matters in what were already highly uncertain contexts. It was just the wrong domain, too soon, for what was a novel and innovative technology. What is expert systems technology up to these days?

Here’s a perspective I wrote in February 2023, at the time of GPT-3 and the “release” of Sydney: “AI, ChatGPT, and Bing…Oh My.”

It’s so weird that we are years into this and still in the same spot, only much more distracted, with a crazy focus on the potential for things LLMs have not made much progress doing.

It might very well be the case (I certainly hope so; for example, you.com is making progress all the time) that invention and innovation will move the technology forward and introduce factualness to LLMs (at a reasonable cost to develop and consume). In that case, this will be just another case in tech history where early is cool but turns out to be the wrong time, approach, or tech.

It might be the case that more compute, more data, more training, and so on will break through. Smart people in the field, though, are doubtful that “more of the same” will lead to a breakthrough in fact-based answers.

On the other hand, until we have that invention and innovation, it might just be that trying to use LLMs for search will be what causes the next AI winter, just as machine translation or expert systems caused previous winters. That would be a bummer. The expectations are so high for LLMs to disrupt Google, replace search, and reinvent research that anything less will be billed as disappointing.

I think it is legitimate to start asking if we’re pointed in the right direction.

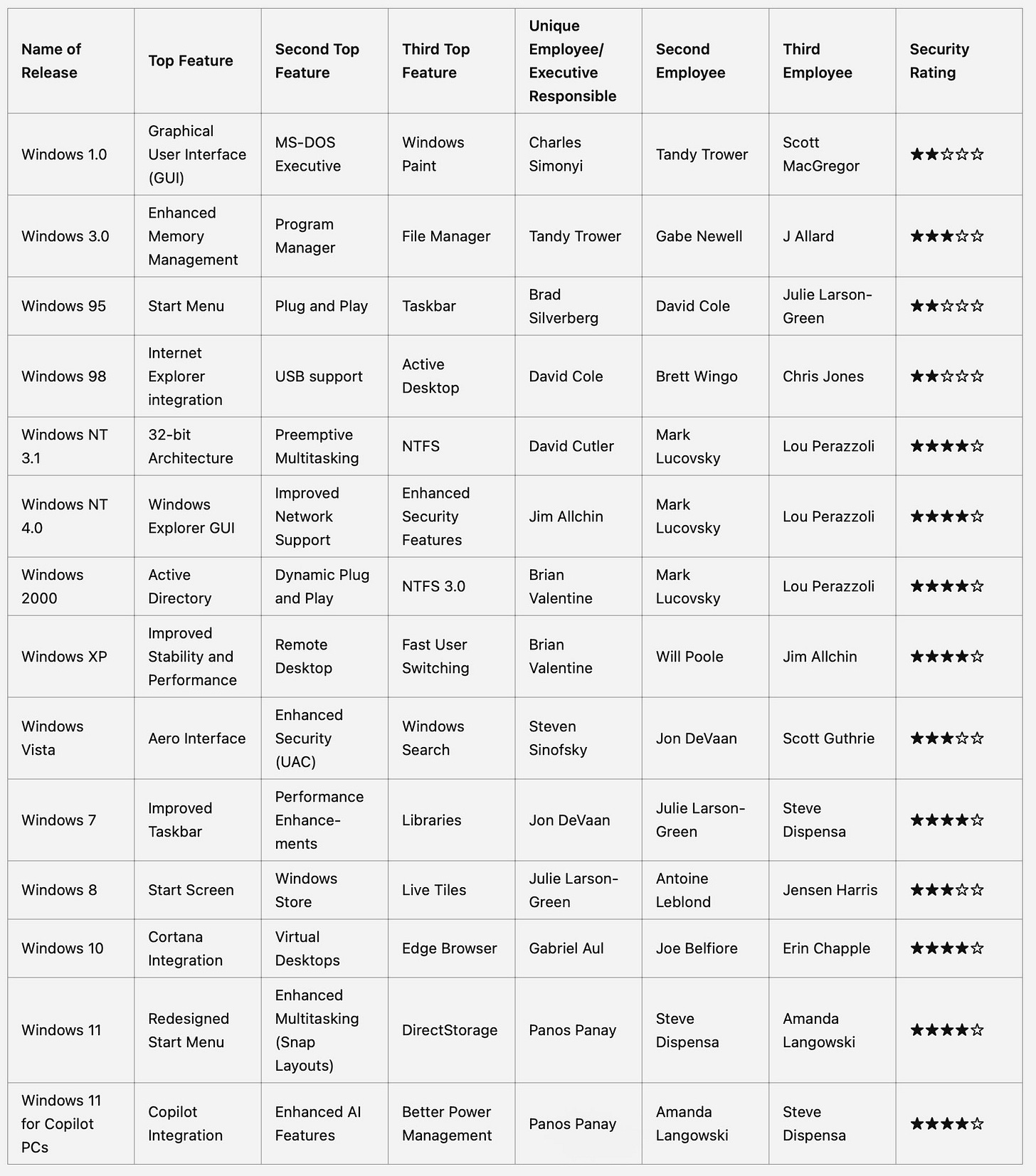

The following are two examples of a simple prompt that someone sent me in reply to an original post on X, showing the responses from the latest ChatGPT and from Perplexity (the latter is often cited as providing more truthful answers). The results, presented in a rather convincing format, include people who don’t exist and others who never worked on Windows, as well as features that seem arbitrarily elevated. My guess is that the names come from people who either wrote or spoke a good deal. The features might have been ones that generated support or tech press chatter. I have no idea how security was rated.

This is a way of asking whether LLMs today could induce another “AI winter,” as a new technology that was broadly seen as taking us to the next general level of AI.

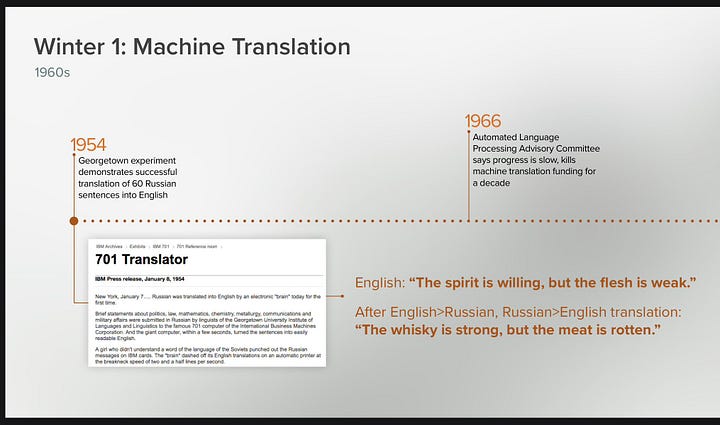

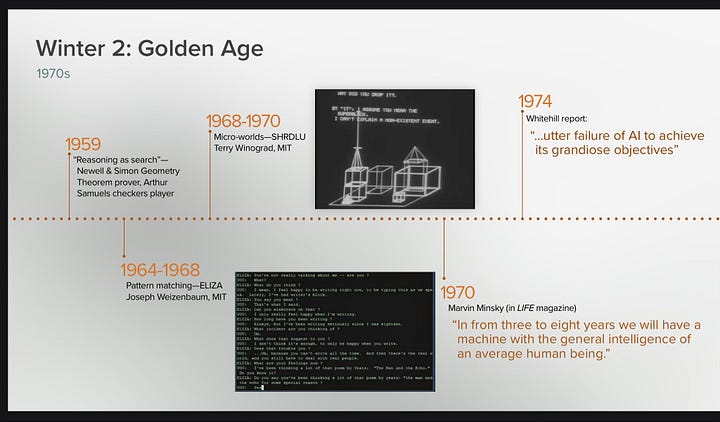

AI Winters

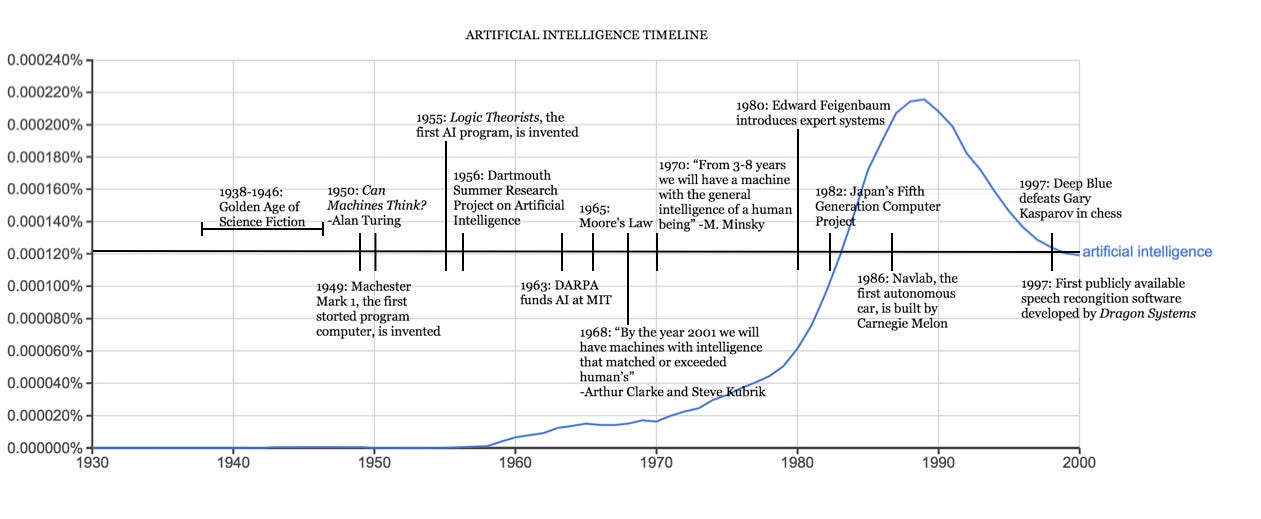

AI has progressed for decades through step-function innovation: big, huge inventions followed by long periods of stasis. That’s different from the exponential curves we think of with hardware, the more linear improvements in business software, or the hit-driven consumer world. There’s been a lot of long-term research, so-called “AI winters,” and then “moments” of productization.

When one of these productization moments happens, it is heralded at first as an advance in AI. Then, almost in a blink, no one thinks of those innovations as AI anymore. They simply “are.” The world resets around a new normal very quickly, and what’s new is just cool but is rarely referred to as AI. As the old saying goes, “as soon as it works, it is no longer AI.”

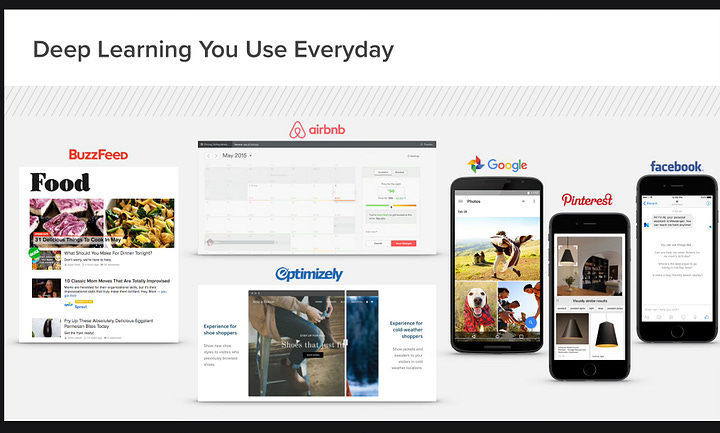

We don’t think of the decades of research behind map directions and routing, handwriting recognition, spelling and grammar checking, image recognition, the matching that happens everywhere from Airbnb to Bumble, or even more recent photo enhancements as AI so much as just “new features that work.”

The chart below from “The History of Artificial Intelligence” provides a view of the roller coaster ride AI has been on, along with the major advances. The article is a short history of these major advances and is worth a look.

I think in a short time we will look at features like summarization, rewriting, templates broadly, adding still photos or perhaps video clips, and even whole draft documents as nothing more than new features, with hardly a mention of AI, except perhaps in marketing :-)

Why is AI like this? It’s kind of interesting. Is it that AI really is an ingredient technology that always gets surrounded by more domain- or scenario-specific code? Is it that AI itself is an enabler that has many implementations and points people in a direction? Is it because the technology is abstract enough that it defies clear articulation? Compared to other major technology waves, it just seems to keep happening. Maybe this time is a little different because so much focus has been placed on hardware: GPUs, TOPS, and custom chips. I wonder if that will create a clearer demarcation of “new,” but I’m not so sure.

Here are a couple of slides from almost 10 years ago that Frank Chen and I made for a lunchtime talk: winters and reality.

Another thing about the history of AI is how, at each step of innovation, there was a huge amount of extrapolation about all the things a specific advance would lead to. But things don’t play out that way.

This is a lot like medical research (and many AI innovations, like expert systems, were applied in medicine). Each discovery of a gene or mechanism is expected to lead to a cure, but the extrapolation proves faulty.

Innovations in AI have been enormous, but they also haven’t generalized to the degree envisioned in the early days.

Perhaps one way this could play out is that LLMs remain excellent as creative tools and generators of language but do not make progress on truth or factualness. The domains chosen for applying LLMs will remain the ones where they are strong today, and they won’t generalize much further.

The question worth discussing is: just where are we on the journey to an AI winter or AGI? Sure, we’re somewhere between those extremes, but which way are we tilting?

—Steven

These were originally posted on X and edited here for completeness and clarity.

LLM: Per Copilot/ChatGPT 4, “Large Language Model (LLM): This refers to a type of artificial intelligence model that is capable of understanding and generating human language text. Large Language Models are trained on vast amounts of text data and can perform a variety of natural language processing tasks, such as text generation, classification, and more.”
