101. Reimagining Windows from the Chipset to the Experience: The Chipset [Ch. XV]

“Paul, you’re not going to view this favorably.” —Steve Ballmer to Intel CEO Paul Otellini as we described over the phone our intent to offer Windows 8 on ARM processors

Welcome to Chapter XV! This is the final chapter of Hardcore Software. In this chapter, we are going to build and release Windows 8—reimagining Windows from the chipset to the experience. First up, the chipset. Then there will be sections on the platform and the experience. Following that, we’ll release Windows to developers and then the public. Then a surprise release of…Surface. There is a ton to cover. Many readers have lived through this. I’m definitely including a lot of detail but chose not to break things up into small posts. There are subsection breaks though.

This first section covers the chipset work—moving Windows to the ARM SoC. Before diving right in, I will quickly describe the team structure and calendar of events that we will follow, both of which provide the structure to this final chapter while illustrating the scope of the effort.


-----

Windows is already a big project, but with Windows 8 we finally got back to Microsoft’s roots: instead of merely a product release, we aimed to make Windows 8 an industry release and certainly a product of all of Microsoft. From every aspect of the ecosystem and for each of our main customer segments we worked to garner significant support and alignment with Windows 8. We built a vision with that in mind. We organized the team to build that vision. We created a calendar of public milestones to deliver it all to the market.

Organizing for Windows 8

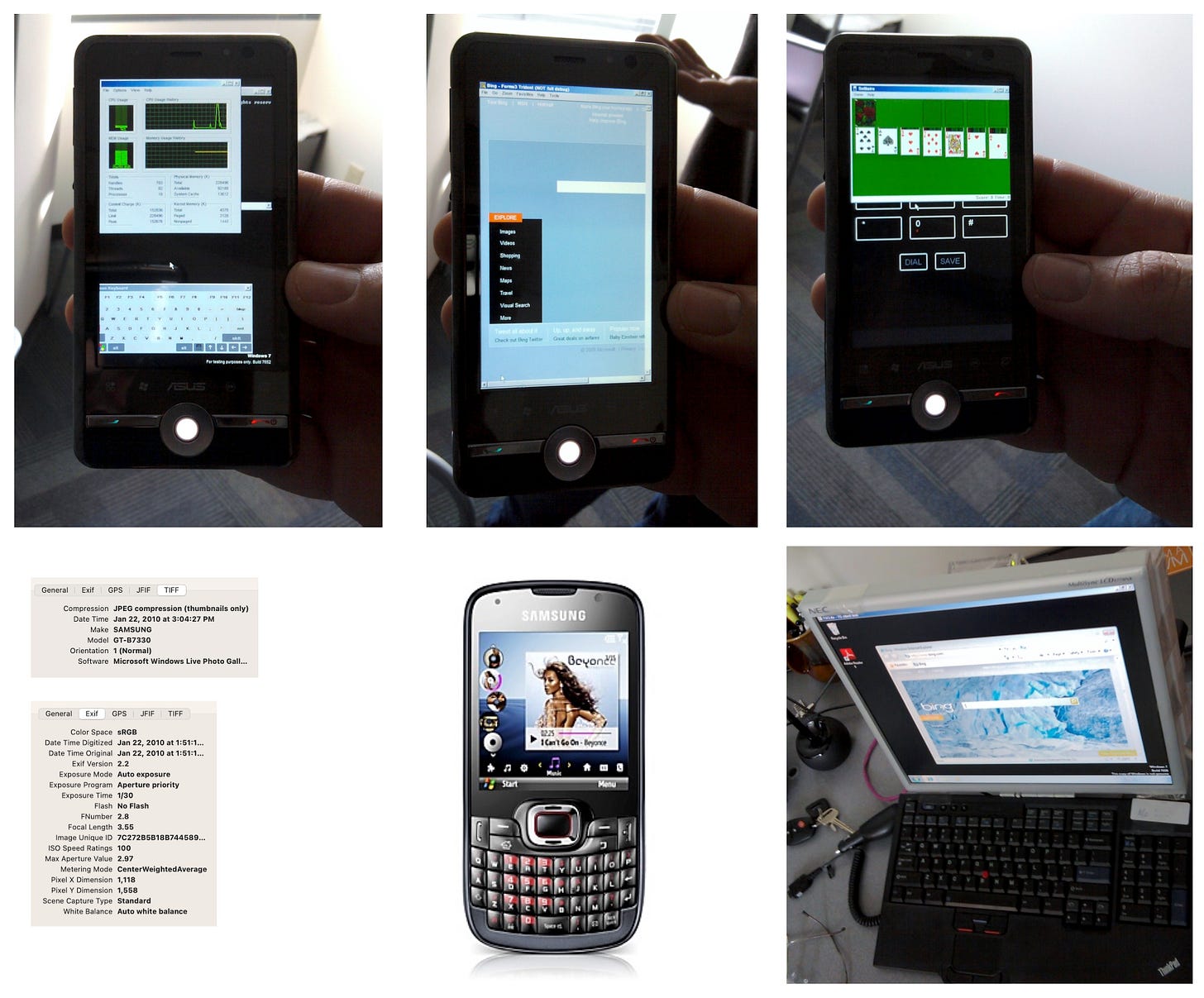

We restructured the team in mid-2009 just before Windows 7 was generally available. It was a complete shift to a functional or discipline-oriented team for Windows and Windows Live services. Internet Explorer, with its unique position as both a platform and an application that also worked on older versions of Windows, was a separate product unit. We organized about 6,000 full-time product development team members (about 2,000 software development engineers) into 55 different feature teams that had on average 35 software engineers and corresponding software test engineers and program managers.

To scale, we created a new management layer, “the directors.” The way I thought of this was from a college hire perspective. A college hire (in development, testing, or PM) had a line/lead manager, who reported to a group manager (the 55 teams, each with 5-8 leads), who reported to a director (each with 4-7 teams), who reported to the VP of Development, Testing, or Program Management. We also had large teams for creating content and localizing both it and the product, product design and usability, product planning and research, the ecosystem which had engineering as well as partnership, marketing and communications, finance, and soon a hardware team. It was as flat and wide an organization as we could come up with. It was quite a long way from when we thought the Word team with 55 developers was the biggest team we’d ever have on one product or the Microsoft Foundation Classes with 4 developers including the manager.
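As a rough sanity check on those numbers, the sketch below multiplies out the figures quoted above. The function names are made up for illustration, and the resulting lead and director ranges are only what the arithmetic implies, not headcounts stated in the text.

```python
import math

# Approximate figures quoted in the text above.
FEATURE_TEAMS = 55
AVG_SDES_PER_TEAM = 35
LEADS_PER_TEAM = (5, 8)        # each of the 55 teams had 5-8 leads
TEAMS_PER_DIRECTOR = (4, 7)    # each director had 4-7 teams


def implied_sde_total(teams, avg_per_team):
    """Total software engineers implied by team count times average team size."""
    return teams * avg_per_team


def implied_manager_range(units, span):
    """Fewest and most managers needed if each one covers span[0]..span[1] units."""
    fewest_units, most_units = span
    return math.ceil(units / most_units), math.ceil(units / fewest_units)


if __name__ == "__main__":
    # Close to the "about 2000" software development engineers mentioned above.
    print("Implied SDEs:", implied_sde_total(FEATURE_TEAMS, AVG_SDES_PER_TEAM))
    # Hypothetical range of directors needed to cover all 55 teams.
    print("Implied directors:", implied_manager_range(FEATURE_TEAMS, TEAMS_PER_DIRECTOR))
    # Range of leads across all teams.
    print("Implied leads:", (FEATURE_TEAMS * LEADS_PER_TEAM[0], FEATURE_TEAMS * LEADS_PER_TEAM[1]))
```

The product of 55 teams and 35 engineers lands a little under the "about 2000" figure, which is consistent with both numbers being rounded averages.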

![Windows 8 organization chart: a table of product (Windows Live, Windows), group, and team names with SDE, SDET, and PM headcounts and the leads for each team](https://substackcdn.com/image/fetch/$s_!q9pb!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F65477379-4529-456f-8444-64621ac0bb85_1680x2110.jpeg)

The organization proved remarkably effective and, what would surprise most people, it was incredibly agile in the face of massive unknowns and learning along the way. What I said about Windows 7 was just as true of Windows 8: even as of this writing I hear on a regular basis from people who describe their work on the team as the best and most fulfilling of their careers. That means the world.

Public Disclosure and Events

It is easy to imagine Windows 8 happening as a chronology from the initial planning before Windows 7 shipped through to RTM, but that does not do justice to the scale of the effort and how much work happened in parallel. When we spoke of the “chipset to the experience” each of those pillars was both a huge effort by Microsoft to engineer the product and a massive contribution from ecosystem partners to complete the delivery.

Tami Reller (TReller), the corporate vice president of marketing and finance for Windows, presented a slide at the end of the project at our all-hands Company Meeting where she outlined the major milestones for Windows 8. These external milestones are on top of the engineering-led milestones: M1, M2, M3, Beta, RTM. There were 10 external events for Windows 8, each a major outreach that included participation from partners representing a particular aspect of the ecosystem. That’s a lot of events for one product. It was another era. While it helps to think of them happening serially, all of what will be described took place in parallel.

These events help to organize the sections that follow: chipset, platform, experience, and the ecosystem. In each of the following sections, we’ll follow various events—the internal workings and the public events—that took place from January 2011 through the final product launch in October 2012. The technologists reading along can appreciate this approach as we traverse the project from layer to layer. Those with a marketing perspective will appreciate the journey by segment or channel.

We will dive into product details quite a bit, the highs and the lows as they say. In a blog post on Windows 8 just after the Consumer Preview milestone we described the goals we intended to achieve for the product experience. These were a distillation of the product vision as we iterated on and completed the scheduled feature set. Each of the sections that follow applies to some of these goals, but do keep them all in mind as they guided the whole project:

Fast and fluid

Touch-first (but not only touch)

Long battery life

Grace and power of Windows 8 apps

Live tiles make it personal

Apps working together to save you time

Roam your experience between PCs

Make your PC work like a device, not a computer

Chipset

The journey from Intel to also supporting ARM was one of the more extraordinary technical achievements in scope and execution by the reinvigorated Windows team. The implications of the work were on two levels. First, the immediate challenges were within the existing ecosystem, starting with Intel. Second, we were literally resetting the PC platform while in flight and doing so required a platform evangelism success on par with the original success Windows achieved in the late 1980s.

I hated that throughout the planning of Windows 8 described in the previous section, right up through the team vision meeting, we were, or more specifically I was, uncertain whether we would be able to make the right big and bold bet on ARM. It was so much work.

The Demo

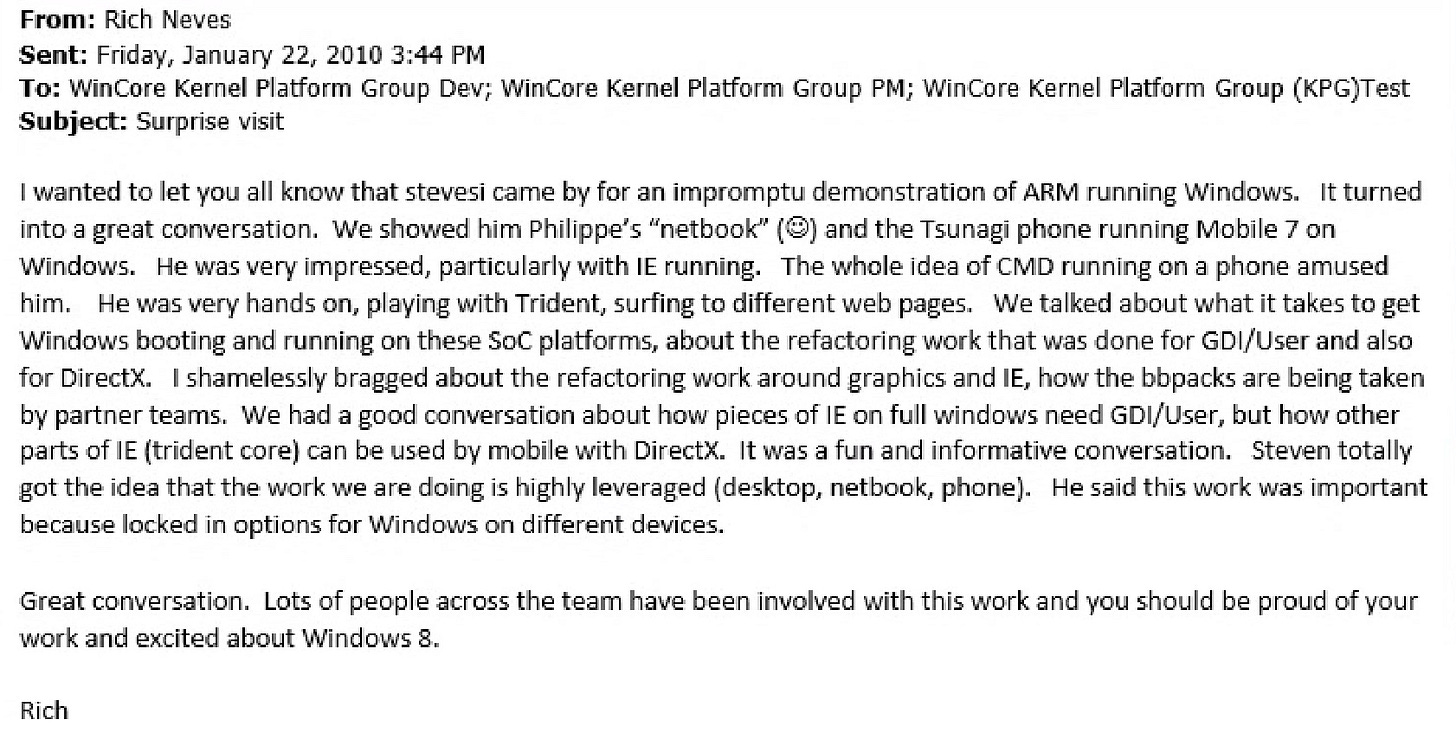

On January 22, 2010, I walked over to Rich Neves’ (RNeves) office in the former COSD buildings of the core Windows NT team—these buildings were built just after the Apps campus and were where much of the Windows and Server teams were housed. The Kernel Platform Group (KPG) team had been hard at work on the port to ARM for months—calling it a port is too small, as every major subsystem of Windows required deep architectural innovations—it was a major refactoring of the Windows code base, much of which began under the guise of the previously mentioned MinWin. One week before the iPad announcement, Rich and the team asked me to stop by their hallway for a look at what they had been up to.

These buildings were much larger and of a different design than the WEX buildings. The traditional Northwest wood construction had been replaced by exposed and painted metal beams and glass in these more recent buildings. I made my way up to a conference-room-turned-lab, where at least six members of the team waited anxiously to show off the progress. They were anxious because the progress was hard to believe. And frankly, we just hadn’t had that many interactions, as I had only been their official manager for a short time and in the Windows culture executives visiting offices was not at all typical.

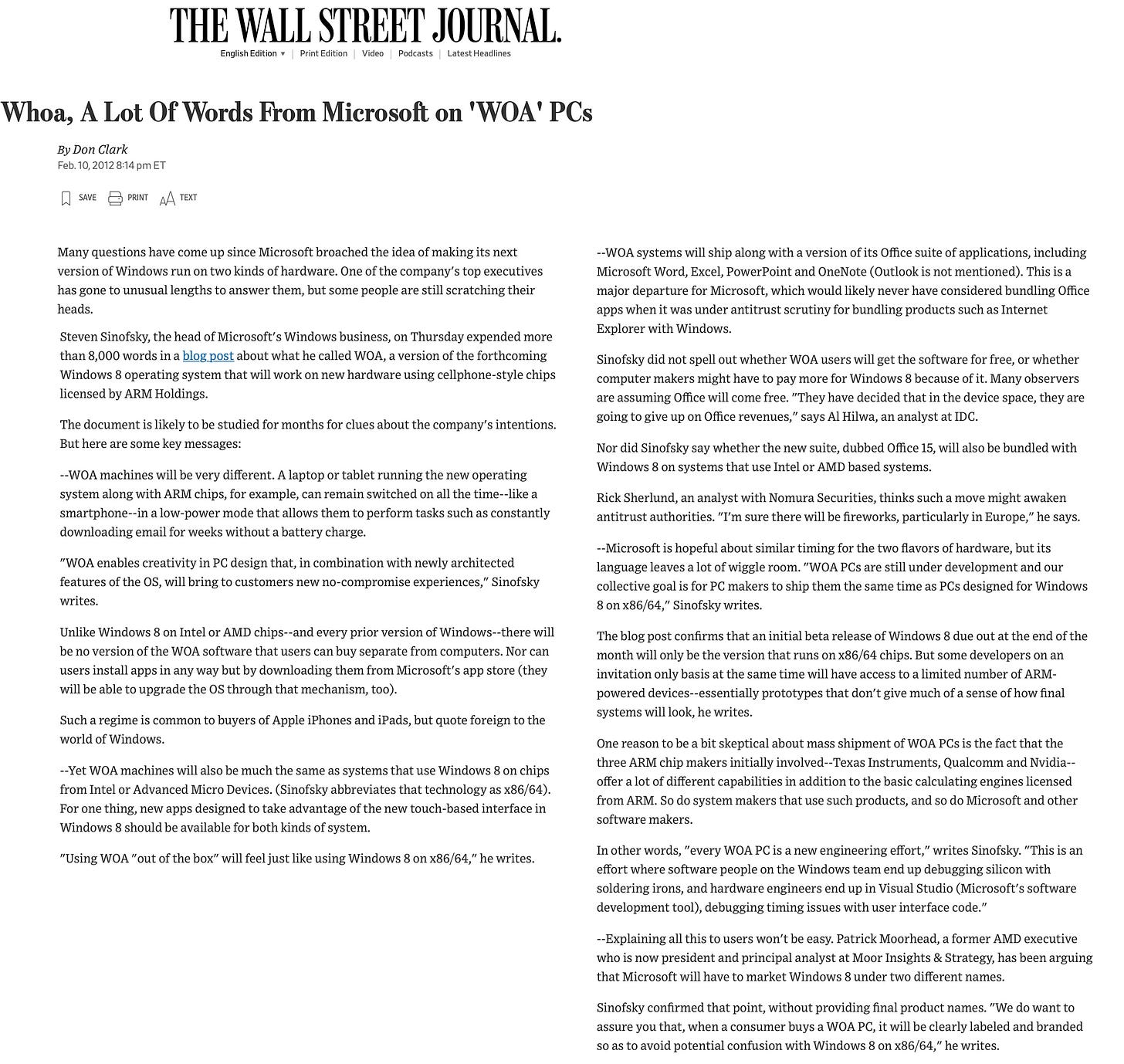

They showed me Windows 7 running natively on an ARM-based phone that was designed for Windows Mobile. Actual Windows 7. All of Windows 7. Windows Mobile at the time was built on a subset of the ancient 16-bit and 32-bit Windows code. They were running the modern Windows NT code base on a phone with an ARM chipset. It was nuts. It wasn’t an old-school demo of the kernel or a command line, but the Task Manager, Solitaire, even the on-screen keyboard used for touch, and a command window (this was still the core OS team after all).

I was speechless. I was incredibly impressed with the team and the work they had accomplished so early in the product cycle—we had not even completed the vision. It was a huge boost to the confidence in our plans. While I insisted we remain opaque and secretive about the work as the full vision came together, this visit made it clear we were on our way.

I took some photos of the screens with my Samsung Windows Mobile 6.5 phone. Two years later I used them in a Windows on ARM blog post described below, intentionally leaving the original embedded timestamps of the afternoon of January 22, 2010, which the internet quickly discovered. That date was just before the iPad announcement and, for those who cared, it let them know we had been working on tablets and ARM much longer than thought and were clearly serious. I know that hardly matters in the scheme of things, but it always made me feel better when we were accused of following in the wake of Apple.

Even the best porting projects—moving from one platform to another, in this case Intel to ARM—have a weird effect. Visible progress is quick, but the last 10 percent of the work takes 90 percent of the effort. There was always a risk that going from early demos to a product would become nearly impossible. The core OS team had the collective experience to know that, in this case, this was no parlor trick. Over the next year the whole of the division and much of Microsoft would be doing its part to bring the full Windows experience to ARM chips.

Rich sent a wonderful mail to the team after the demo. It was the start of something really great.

The question: What would this mean as a product? The answer required a different perspective.

What Does ARM Really Mean?

Windows engineering leadership, leaders of the former COSD organization, had significant experience in porting Windows—translating the code from one chipset architecture to another—across numerous architectures. That is why this was not as outside of the box as many perceived.

In fact, Windows NT code was designed from the start to run on many chipsets, and the team was never particularly enamored of the Intel architecture. Porting Windows from 32- to 64-bit chipsets was one of the most contentious times in the Intel-Microsoft relationship, when the Windows team essentially sided with Intel rival AMD in the definition of 64-bit Windows. Those “AMD64” references in the source code drove Intel crazy. On the other hand, the team spent a good deal of energy porting to chipsets such as MIPS and DEC Alpha that proved to have no business case but yielded improvements in the architecture, making future ports easier. While porting Windows to ARM involved the whole team, the core OS kernel team drove the project and provided the deep technical leadership and expertise required to make it happen.

This new cross-platform effort was a break from Intel of significant proportions, the implications of which were important to the future of Windows.

It is worth noting the long history of cross-platform development at Microsoft. Many believe Microsoft was always wedded to Intel, and certainly from the advent of the IBM Personal Computer in 1981 that was obviously the case for operating systems. As described, the Apps or Office team had been committed to Macintosh heavily since that product was under development, and it wasn’t until 1997 that Macintosh apps were even split from the main Windows Office development team. Before the IBM PC, the origins of Microsoft go back to providing BASIC and early apps such as Multiplan on a wide range of micro-computers running any number of operating systems. My own very first project was to develop the cross-platform libraries that emulated the Windows 16-bit APIs on Macintosh to ease cross-platform development. That project ultimately led to the poorly received Word 6.0 for Mac and some valuable lessons for me. Microsoft seemed to have a good track record for knowing when to ebb and flow with platforms. From the start the original Windows CE and Mobile efforts were on non-Intel chipsets.

The problem was we were now in astronomically deep with Intel and the entire ecosystem of PC makers, development tools, peripherals, and a constellation of vendors and manufacturers all of whom were integral to Wintel. It was not like the early 1990s when it was viewed by the outside world as a minor side project for Windows NT to work on some fringe chipsets.

Even if, and that was still not certain, we had all of Windows running on ARM chips, we still needed support for all the peripherals and devices, companies to make the chips and computers, and software development tools and support up and down the stack of features. Microsoft had long ago become reliant on the ecosystem for much of this work. Over the past ten years or so we had increasingly included support within Windows itself for common peripherals such as keyboards, mice, some displays, various forms of storage, and mainstream printers, and with Windows 7 we started supporting some sensors and trackpads. The ecosystem, however, still contributed a lot of code required for a basic PC. That was an asset through Windows 7 and would continue with Windows 8 for Intel. For ARM, however, much more of this would need to just work and Microsoft would need to deliver these capabilities.

Cross-platform development is inherently seductive to technologists in how straightforward it should be, highly desirable to business leaders who value the optionality, and easily a requirement for enterprise customers who always insist on the diversity of their needs. The odd thing about cross-platform is that no single customer really cares because they generally have a single computer. But when it comes to the software, all it takes is one application that one customer needs and then the whole effort can fall apart. This was especially, and overwhelmingly, an issue for Windows, which took enormous pride in (and economic benefit from) running nearly every program ever written for Windows, even on Windows 7.

While the porting effort would continue, the issues above in just making Windows work were enormous.

SoC (system-on-a-chip) was both a generic term and a specific term. For Windows 8, when speaking internally, SoC meant ARM, the chips manufactured to the specifications designed by ARM Holdings, the then-British company that created ARM. Unlike Intel, ARM designs chips but does not manufacture them. Companies license ARM designs and intellectual property, which they can customize, and then either manufacture the chips themselves or contract a manufacturer like TSMC, Taiwan Semiconductor Manufacturing Company. Texas Instruments, NVIDIA, and Qualcomm also license ARM and offer designs manufactured in house, or by other chip makers across southeast Asia. Intel, as they often reminded me, held an ARM license. Apple licensed ARM designs and customized them.

Intel had a SoC as well, known as ATOM, the chips that were used in Netbooks. Strictly speaking, the ATOM SoC was a system-on-a-chip in that the main components were in one chip. ATOM, however, came to rely on the existing PC architecture and was entirely compatible with all of existing Windows and so, as far as Windows was concerned, it was a standard Wintel PC. Whenever we talked about SoC, we included Intel, though from a product and engineering perspective, Intel’s effort to make a SoC competitive with ARM was a longer-term goal, one which Intel never achieved, unfortunately.

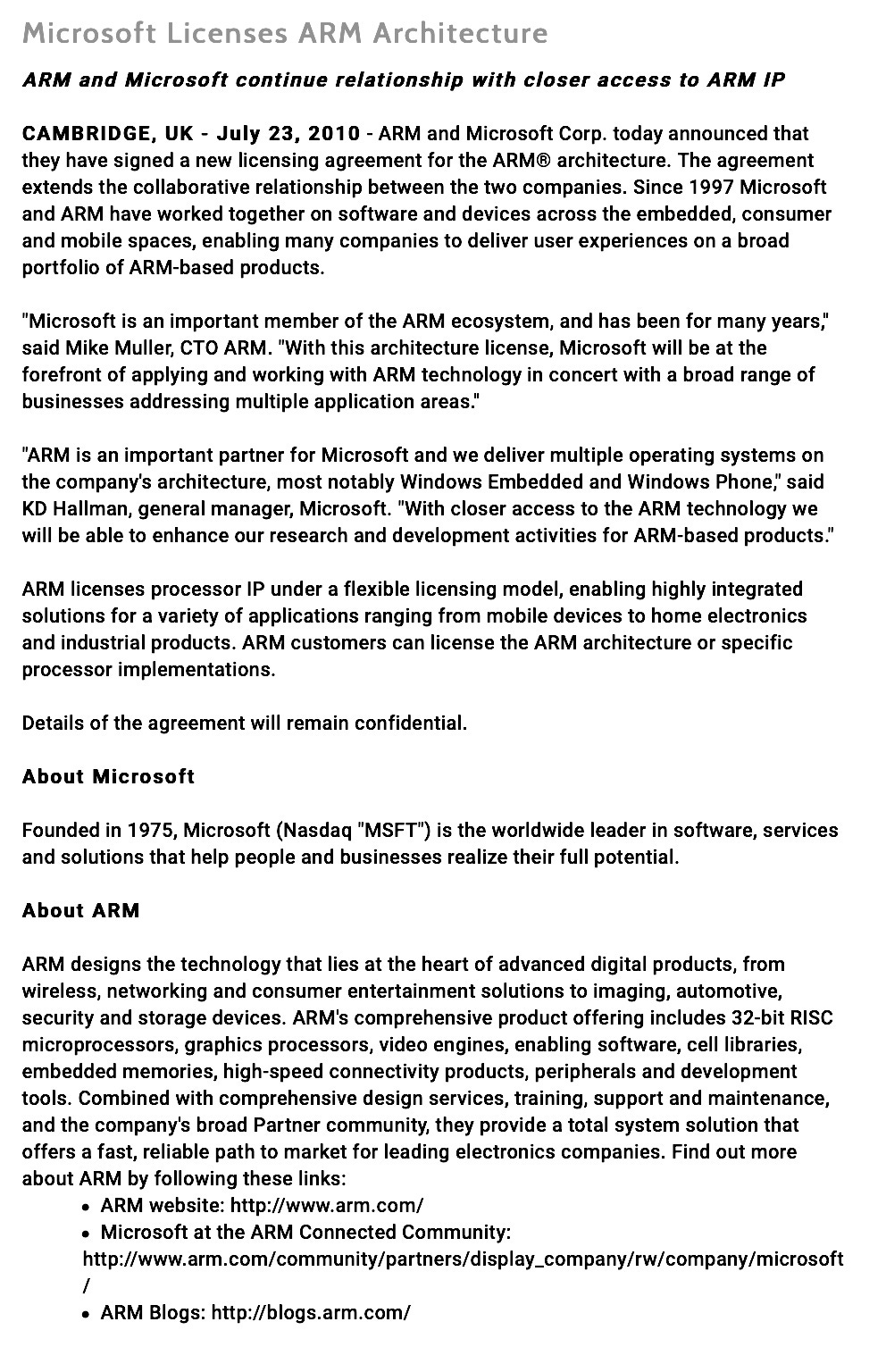

We called Windows running on ARM SoCs WOA, pronounced woe-ah, Windows on ARM. In July 2010, Microsoft also became an ARM licensee, just like Intel and all the mobile phone companies.

The difference between ARM SoC and Intel SoC gets to the heart of a computer science debate and innovation dating to the 1980s, and one of the biggest topics in systems groups when I was in graduate school. ARM chips, at the most basic level, were designed to be simple and leave complexity to the software layer with the belief that simplicity would ultimately yield better performance, power management, and lower cost. They were rooted in the ideas of reduced instruction set computing, RISC. The Intel SoC was rooted more in the idea that chips would continue to increase in transistors, doubling the transistor count every two years according to Intel co-founder Gordon Moore, and the best use of that was to enable chips to do ever more complex operations at the hardware level. Intel and AMD chips in general were known as complex instruction set computing, CISC, and even the Intel SoC was technically a CISC. There’s much more to this overly simplistic explanation of a ferocious debate and I offer two references in this footnote.1
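Taken literally, the doubling rule quoted above can be written as a simple exponential. The symbols $N_0$ (the transistor count at some starting year) and $t$ (years elapsed) are introduced here only for illustration, and real chips have only roughly tracked this idealized curve:

```latex
N(t) = N_0 \cdot 2^{t/2}, \qquad \text{so after ten years } N(10) = N_0 \cdot 2^{5} = 32\,N_0 .
```

A thirty-two-fold increase per decade is the kind of transistor budget the CISC side of the debate expected to spend on more complex operations in hardware.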

The WOA efforts gave the core OS team a chance to rearchitect major subsystems of Windows to perform better, manage power better for longer battery life, consume less memory, and be more secure. A significant part of the value proposition for porting to ARM was that Windows would make step function improvements in these areas. The vision of behaving much more like a consumer electronics device depended on improvements to Windows that could only be done on ARM.

The basics of connectivity, disk storage, and memory were rearchitected as well, using technologies required by ARM designs. This technology took much less physical space because there were no sockets, plugs, or cables as a laptop might have; everything was packaged on one circuit board. In addition, the team engineered support for a whole set of new peripherals that PCs never had, like a compass or accelerometer (that’s how a device knows when it is rotated). This work also applied to Intel-based PCs, which was kind of cool even though few, if any, devices would gain these peripherals any time soon.

Software engineers love a good porting project. Ports are finite, measurable, and every day is a day of progress, mostly. Porting Windows to ARM was a massive effort, but also a good deal of fun for the team. They built out a whole new lab and set of tools to test WOA hardware. They designed and built ARM motherboards that had enough connectors to diagnose performance and quality as well as to manage installing new builds of software and test at high volumes. At one point, we had hundreds of WOA circuit boards racked and stacked in the lab.

ARM Strategy Goals

The easiest product strategy would have been to simply complete the work and offer WOA as a unique Windows product much like Windows for RISC or Alpha, or even the 32-bit or 64-bit choices PC OEMs could make. As with any chipset, Microsoft would also provide tools so that existing Windows software could easily target the new chipset.

At the highest level, such a port seemed like a good idea. As if by magic, Windows would gain all the attributes of ARM, such as always-on mobile connectivity, cheaper components, extended battery life, and so on, plus all the existing benefits of Windows, such as Office, choice of hardware from many vendors, and even the vast library of existing software. Oh, if only magic were possible.

As it turned out, every constituency in the PC ecosystem had a different rationale for wanting WOA and a different view of what WOA could mean and deliver. Our job was to navigate what amounted to conflicting customer needs and desires. As one could easily imagine, the various perspectives collided and meant there would be tradeoffs that made each constituency unhappy in different ways. Our intent was certainly to deliver on the promises of ARM, but there was difficulty in delivering those along with the “plus” for three important reasons.

First, ARM devices were not architected like x86 devices. Normally, one could pop a DVD into a drive and install Windows. ARM devices, like smartphones and tablets, are built as a complete package of hardware and software. There are no separate operating systems for ARM devices due to the nature of ARM—the customization and choices made by device makers define unique devices. They then do the work in the OS to mate the device and OS together. Apple did this with the iPhone and the iPod and most recently the iPad. Smartphones from Nokia, Samsung, LG, and so on all do this work whether designing for Android, Symbian, or some variant of an OS. For Microsoft to deliver WOA as a product we would have to specify the ARM platform to a great degree.

That was different than the OEMs might have hoped for as they would have liked to source chipsets and other components from a variety of suppliers while treating Windows as just another component. ARM from any supplier was already cheaper than Intel, a major plus for OEMs. If one were to take a cynical perspective, OEMs simply wanted ARM as a point of leverage to negotiate with Intel over the price of x86 chipsets. For too long Intel held all the cards and even with AMD it seemed there was little leverage. Additionally, with multiple suppliers of ARM chipsets the OEMs saw an opportunity to negotiate even better terms by competitive sourcing just for ARM. While this sounds awesome, it would have been unmanageably complex to deliver WOA such that the choices of what hardware it would run on would be made by many independent OEMs. WOA hardware needed to be determined at the start of the project. The Android ecosystem took such an approach, and by and large customers are left with devices to wither without updates and security fixes, simply because the complexity of delivering them across the diversity of devices is overwhelming.

Second, from an end-user perspective WOA PCs should behave better, not just differently, than an x86 PC. Windows NT ran on several other chipsets, but all but x86 (and x64) failed because there was no value proposition unique relative to mass-market chips. Software makers did not see the volume that would drive them to spend energy porting their own software, while PC makers did not see unique software to justify switching their supply chain. Our work on WOA was rooted in creating a unique value proposition. This was a lesson from the early support in Windows for different chipsets.

Third, the assumptions built into the Wintel model of hardware and software needed to be revisited. The Wintel PC assumed a wide-open hardware platform where anything could be inserted, plugged, soldered, or connected to a PC or laptop and along with that install any necessary software to make a peripheral function. The ability of any hardware to require device drivers or kernel mode software was the bane of the existence of Windows already and the requirement that Windows accept all such software was a recipe for continued challenges with security, reliability, and battery life. Everyone loves the flexibility, but the overall cost had become an inexcusable burden as PC usage expanded to a billion people.

Creating a next-generation platform with this extensibility from the start would bring these weaknesses to WOA. Why bring everything bad about a Wintel PC to this new hardware platform while at the same time reducing the potential to surface the good? This constraint creates a significant break from the way OEMs and customers thought of a PC. Whether Windows wanted it or not, computing devices were moving from a “break open the case and do what needs to be done” to a “sealed case” design as is common with most all consumer electronics.

WOA was not just an alternative chipset for Windows the way we saw 64-bits, RISC, or Alpha, but an entirely new way to think about building a computer platform.

Taking a step back, we wanted to reimagine what Windows could be. We knew we would always have x86 PCs and those would always support the existing body of applications, hardware peripherals, and the ecosystem that provided them. There was little life left in the PC ecosystem. The industry’s hardware innovation cycle was firmly planted in mobile—radios, sensors, cameras, rapidly improving processors, memory, and storage, and more. The PC ecosystem was essentially in legacy-support mode, vendors doing what they could to squeeze out costs and continue to win existing business, while trying to break into the high-volume mobile space.

Reading this today it is abundantly clear how the world has changed. Nearly all the innovation that expanded beyond memory, storage, and CPU happened and continues on mobile platforms. By and large, innovations in PC hardware now feed off what happens in mobile so long as the suppliers of both components and software can do the work for PCs. Nearly all organic PC innovation is driven by the market for mega-scale datacenter computers, which are very low volume and very high price. Quite simply, the market size for PCs is one fifth as large as the smartphone market in unit sales. PCs, while twice the size of the tablet market, do not benefit from smartphone innovations as do tablets.

The PC, however, was and remains woefully deficient in the very attributes that were so exciting with the latest smartphones. The list of smartphone attributes read somewhat like the list of all the shortcomings of laptops: long battery life, always connected to the network, mobile network connectivity, instant resume from standby, consistent performance no matter how many apps are installed, high-quality cameras, GPS, motion sensors, light sensors, identity sensors, and more. We summed this up as a consumer electronics level of experience, maintained over time.

An example of the contrast between ARM architecture and x86 architecture is the way an iPhone works relative to low power standby mode, something everyone knows has been historically one of the flakier aspects of a PC.

Phones made people accustomed to consumer electronics devices with very long standby and all-day battery life all while remaining connected to the network and able to light up to full use instantly when a new message arrives. By contrast, even in 2022 people walk around balancing unfolded Windows laptops out of a worry of losing work should the PC fail to resume successfully from standby with the lid closed.

The original iPad had 30 days of standby and 10 hours of usage. During that entire time the product continued to maintain a connection to the network to receive email, messages, notifications, and more. We take for granted the ability for the device to work instantly when we need it and to quickly blank the screen and dramatically reduce power consumption when we don’t, reliably and without question.

Building this type of feature takes work at every single layer of the system from the chipset up through the OS and developer platform to the apps. It doesn’t “just work” because of a change in chipsets. In the case of Windows, the variation across the ecosystem makes this even more intractable when considering what might be plugged into the PC or what components and supporting code the OEM might have chosen to use.

Our goal was to infuse Windows with these attributes so that new Windows 8 devices and future devices could have the qualities of smartphones and tablets that consumers were experiencing.

We did not set out to have lower-priced devices, to find competitive leverage against Intel, to make better Netbooks, or to simply have more choices to do exactly what Windows already did perfectly well with Windows 7.

The job of engaging the OEMs to achieve these goals was non-trivial.

Steve and Steven Call Intel

We had to break the news to Intel that we were working on moving Windows to their architectural rival—the one that beat out Intel for the iPhone just after the success of winning Apple as an Intel customer for Mac. We planned on an event billed as a “technology demonstration” at CES in January 2011.

This was earth-shattering, geopolitically significant news. Some would see it as, essentially, a fracture of the Wintel relationship even though in practice we were just expanding Windows as we had always done, just as Intel supported Linux. It would be like McDonald’s breaking up with Coca-Cola to switch to Pepsi, though more realistically simply adding Pepsi and offering choice. An entire industry was built on the Wintel partnership. For our part, we were confident that for the foreseeable future we would not only be supporting but actively building Windows 8 for x86 devices. That’s why this was different than the Osborne effect. It is also why Apple had a much easier time in their past chip transitions because they were fully moving to the latest chips. We indeed planned to run with both x86 and ARM at the same time, for Windows, and recognized how difficult this might be.

In mid-December 2010 as we prepped for CES in January, we huddled around the low coffee table in SteveB’s office, eyes fixed on the Polycom, and Steve called Intel CEO Paul Otellini.2 At Intel his entire career, Paul joined the company in 1974 straight out of graduate school. He rose through the ranks to become Intel’s first non-engineer CEO, a position he held for more than five years. Paul was always polite and gracious in our meetings, which made things even more difficult. Our investment in ARM would prove a significant strain in the near term.

Steve took the lead, of course. This was not a technical conversation but about the partnership.

He said we had “some news.”

“Paul,” he continued slowly and deliberately, “you’re not going to view this favorably.”

Steve told Paul we’d made the decision that in addition to Intel we would target ARM chips with the next release of Windows and that we would be doing a technology demonstration at CES in January 2011, just a few weeks away. We would talk about Windows support for ARM without doing a full Windows 8 reveal.

Steve reiterated that Intel would continue to be our primary target and that we planned on the vast majority of PCs to be Intel-based PCs still. I chimed in with some words about ARM being the focus for SoC efforts for Windows and that Intel’s SoC was also a target. Our announcement would be about SoC, not exclusively ARM, and would feature Intel SoC products. This would scarcely soften the news.

Paul was quiet for a bit. Steve had delivered the news perfectly. Steve and I kept looking at each other waiting for a response. Seconds seemed like minutes.

Finally, we got one.

At first, Paul said Intel’s SoC would be more competitive, and we didn’t have to do the work to support ARM. Then he asked two questions. “Would the ARM chips be executing any Intel instructions?” In other words, would ARM be emulating Intel, thus making it compatible with Intel? There might have been several reasons he wanted to know this, but I would imagine protecting Intel’s intellectual property was top of mind with this question. It was irrelevant because we had no intention of trying to achieve Intel compatibility; it would not have worked given the state of the art and our strategy. Intel was rather protective of attempts to emulate or reverse engineer their x86 instruction set and remained sensitive to how Microsoft reduced their influence during the transition to 64-bits.

Second, Paul asked about Office and what our plans were there. He knew that Office was the most strategic product for Microsoft on traditional PCs, and he also knew that if Office were part of the equation, then software vendors would be taking a lead from Microsoft. We knew this as well. Steve said we didn’t have an answer as we were still working on our plans for Office. This was true and, in fact, the plans for Office were still an internal issue and a frustrating one for me.

I had the role to close out the call outlining precisely how we planned to show Intel SoC at the event and how we would be showing existing Windows applications on the Intel SoC as a key differentiator of Intel’s efforts. He seemed pleased with that and offered to help us. We always included the Intel SoC, the ATOM product, even though it lacked many of the qualities we saw in ARM-based chipsets.

Days later, I flew to Intel’s headquarters and visited with David “Dadi” Perlmutter, leader of the Intel Architecture Group, essentially Intel’s core chip business, and a longtime Intel employee and rumored CEO successor to Paul, thus making us rumor-mill equivalents. Dadi was always fantastic to work with as we could bond over challenges we each faced in our efforts while recognizing the overall complexity of the equation. We had a cordial meeting. He offered up many technical issues we might face, pointing out the fact that Intel had deep knowledge of ARM as one of the earliest licensees of the architecture. He too was focused on application compatibility and, while he had been briefed, was satisfied to hear everything again firsthand.

In many ways the call and visit were the culmination of both supreme technical work on the team and of the most incredibly difficult product choice I ever had to communicate. The relationship between Intel and Microsoft formed the cornerstone of the PC era—a partnership and set of personal relationships that compared to any in business history. The relationship always had some tension—the kind of tension seen between hardware and software since the start of computing. My feelings alternated between betraying a business partner and relief that we could move computing forward, depending on the moment. Ultimately, for Microsoft to be relevant in operating systems it needed to rely on new hardware.

That said, Intel had similar feelings and had simply executed them more openly. For Intel to be relevant it needed a much more concerted effort to woo our competitors: Linux in the data center and Apple PCs. Going way back, my very first meeting at Intel with BillG, Andy Grove, Gordon Moore, and others was one where the main topic of conversation was how openly and aggressively Intel was courting Java developers. This was a time when Microsoft viewed Java, a cross-platform tool, as competitive to Windows, while Intel viewed it as a slow platform that would benefit from faster processors.

![The Register, 8 January 2011, by Rik Myslewski: "Intel: Microsoft's ARM-on-Windows deal no threat. 'Bring it on!'" Source: https://www.theregister.com/2011/01/08/intel_shows_no_fear_of_windows_arm_deal/](https://substackcdn.com/image/fetch/$s_!_J9p!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F2dd6624f-8c32-4b2a-84d8-eebfbf3cae03_1994x2238.jpeg)

Over the coming months Intel would aggressively counter our work on ARM. Public statements were clear and pointed and one could only imagine how the conversations went with their partners in private. Business can be brutally competitive. From CES through the release, Intel was on the field battling against Windows on ARM. They had to. We expected them to. Still, it was not helpful. In hindsight, I might even suggest they were successful in convincing OEMs of their perceived downsides of ARM. All is fair in business even with the best of partnerships. It was Andy Grove himself who used the term coopetition in his thoughts on the industry.3

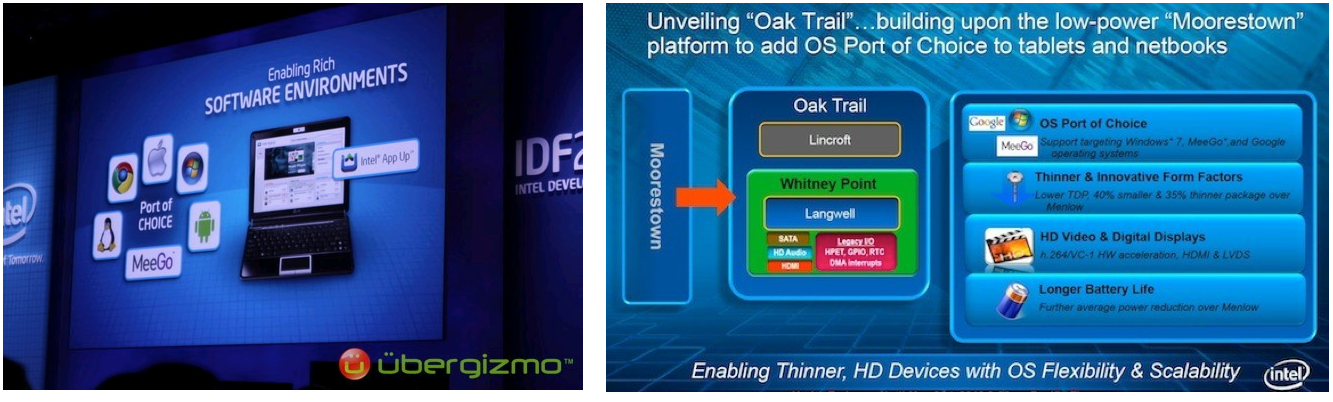

One reaction from Intel was to further emphasize that Intel chips supported a variety of operating systems. In one slide we kept seeing pop up at Intel events, the company emphasized this by putting the Windows logo in a tiny box with Google and MeeGo (a Linux distribution) with the title “Unveiling ‘Oak Trail’ building upon the low-power ‘Moorestown’ platform to add OS Port of Choice to tablets and netbooks.” Another variant of the slide included every operating system in use at the time, including Apple. Putting Windows on par with these offerings and using the phrase “port of choice” definitely rubbed me the wrong way. All is fair in this type of competition, honest.

Once Intel was fully briefed, we were ready to head to CES where SteveB would lead the show with a keynote. We chose to have a separate event with just the press and our key ARM partners to be able to offer Q&A and to let attendees see the demonstrations in a smaller venue.

CES 2011 “Technology Demonstration”

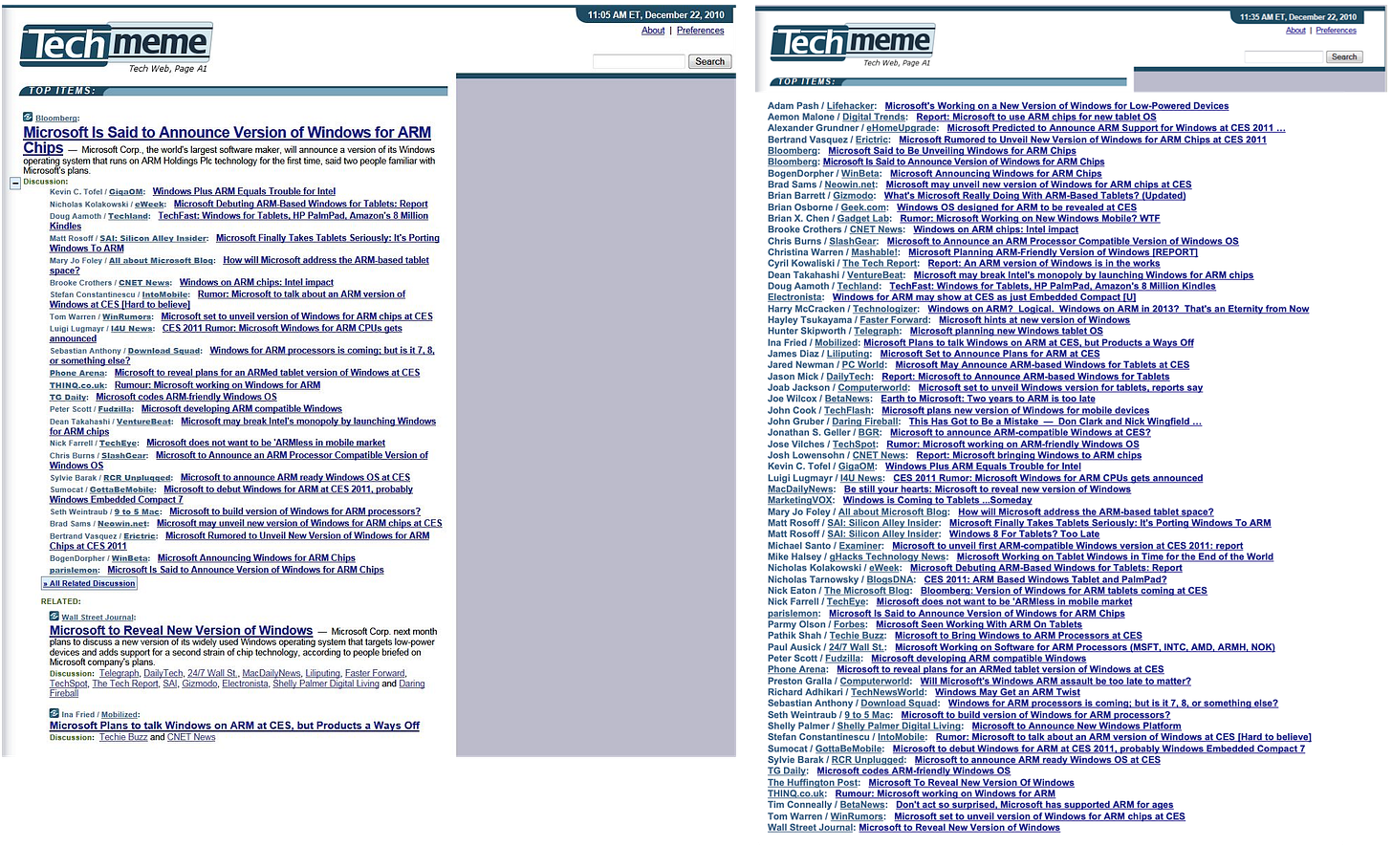

There was no way around it, but the coverage of Windows embracing ARM would be seen through the lens of the implications for the Wintel partnership. Additionally, it would be seen as a failure for Wintel to secure a market position in tablets, the hugest of huge new categories created by Apple and now overrun with Android. This was the kind of event we had to hold after the stock market closed because some would overreact and think of a technology demonstration as tradeable news.

Mike Angiulo (MikeAng) and the ecosystem team created a showcase for the technology and partners behind ARM. This was the kind of event where the primary goal is to prove to partners we are committed to the work by making a public statement. We would work in the background to gain enough support so the partners at least agreed to be named, which would be an accomplishment. We’re on stage but the success of the event looks like a slide with all the partner logos, which the team delivered. The team built out all the demonstrations which were, to put it kindly, in a very fragile state. This was one of those big tables of devices on display where we couldn’t even have backups because there was literally only one device, often a test breadboard complete with wires hanging off. Amazing work!

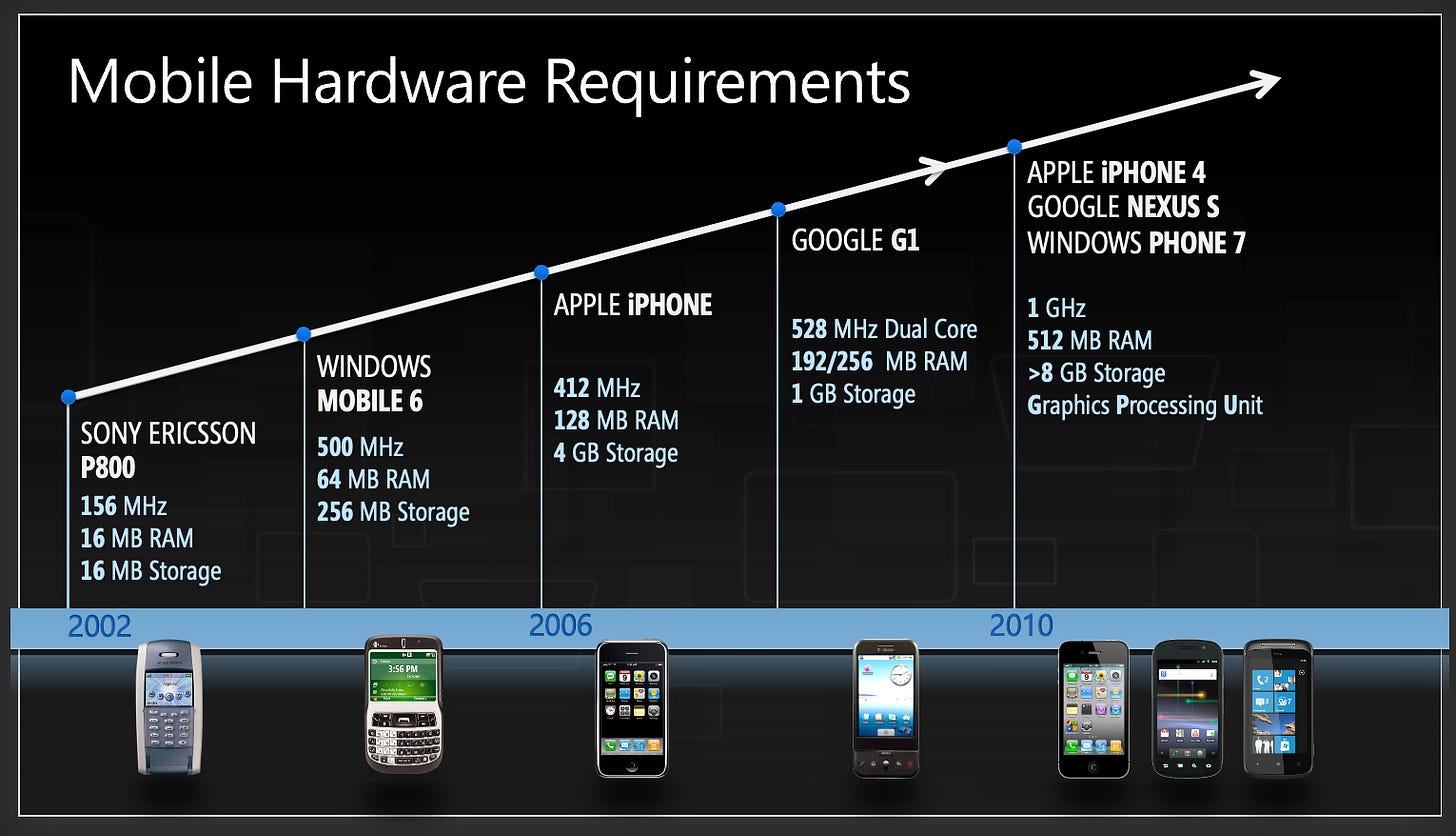

We began by showing how hardware on SoC (ARM) mobile platforms had improved. I put up a chart showing how Windows had doubled system requirements every release from Windows 3.1—twice as much disk space and twice as much memory from 1992 to 2006. The takeaway was that phones and PCs had converged in hardware capabilities, and phone hardware was improving faster—in other words while Windows had finally leveled off in hardware specs, smartphones basically caught up to PCs in capabilities.

As we worked through the computers running Windows on ARM we showed a number of significant innovations—things that did not work on phones or tablets…yet. Mike showed ARM devices using USB storage, printing, and connecting to external displays. While these were mundane tasks for a PC, they were the kinds of basic features not supported on the iPad or Android tablets. We were tapping into the breadth of capabilities in Windows.

SoC was the driver for the innovation, bringing along with it benefits such as integration of many peripherals not available on PCs, lower power consumption, no loud fans to cool the chips, and much smaller devices. By showing off SoC devices running Windows and Office, complete with printing, USB storage, and good graphics, we made it incredibly clear that Microsoft was dedicated to bringing ARM to market. MikeAng planned and executed the whole show, including working with his team and aligning partners. To say it was masterful in the context of a wild announcement no one was expecting would be an understatement.

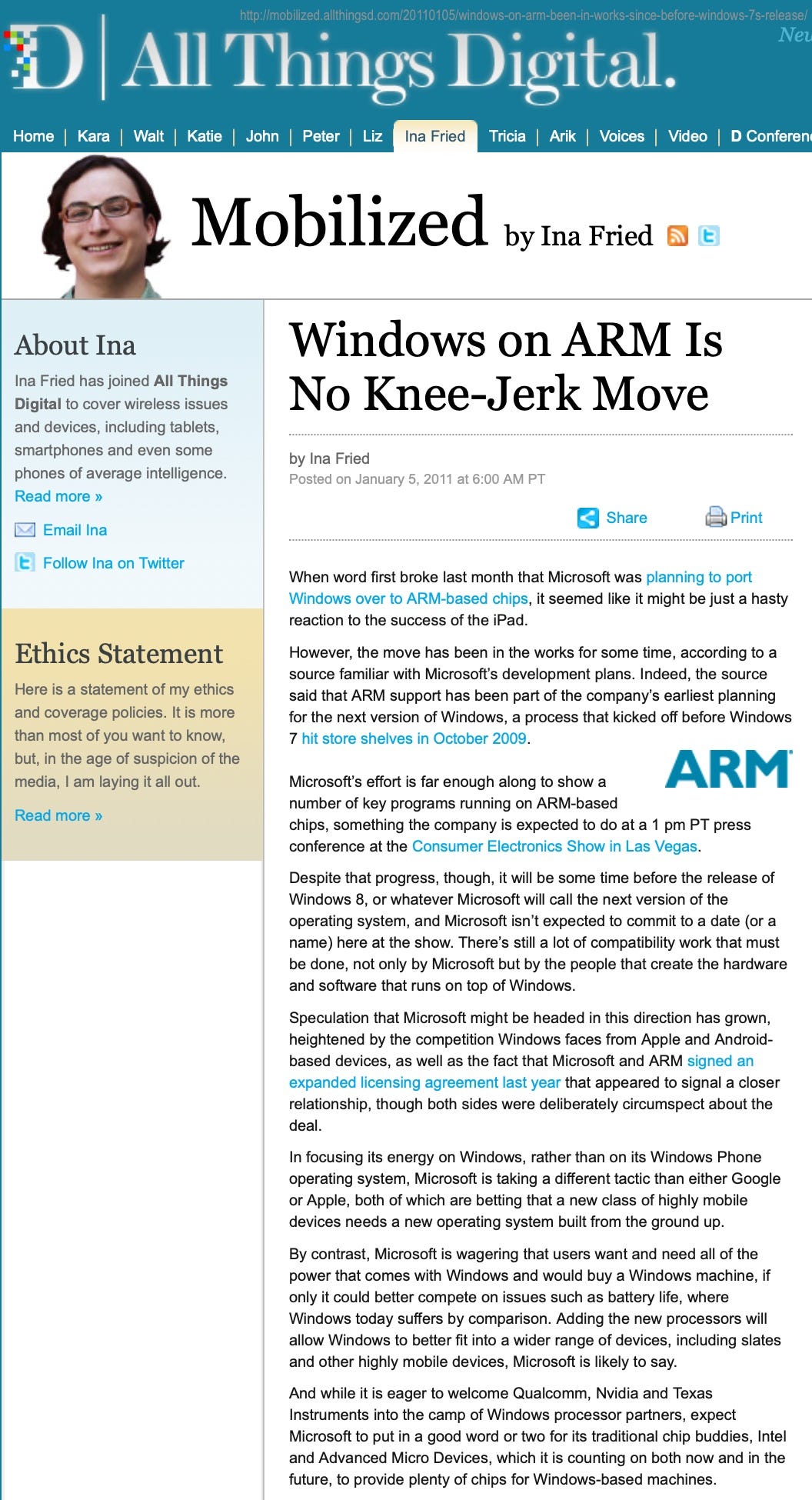

The press coverage of the event was worldwide and spanned the technology and business news. This was one time when a leak about the contents of the event probably led to more interest. As expected, there was a focus on the implications for Intel. The coverage was anchored by the huge showing of Android tablets at CES and the success in the first months of the iPad. Ina Fried, reporting for the All Things Digital outlet, had a detailed live blog of the event covering minute by minute what was shown. Fried also had a follow-up story that ran with the headline “Windows on ARM Is No Knee-Jerk Move,” pointing out how long the work had been underway.4

Later in the evening SteveB held his much broader keynote including Xbox, Windows Phone, and a nod to SoC where he said “Whatever device you use, Windows will be there.”5

Still, CES 2011 had been rather challenging or even lackluster for Microsoft overall. The main keynote the company delivered was primarily about the 2010 wave of products. Xbox was making big news with Kinect but there was a dearth of Kinect-enabled games. Android totally dominated phones and pushed aside what presence Windows Phone 7 could muster just months after launch—Android’s open source strategy (free OS software) and anything-goes hardware drove a broad range of low-cost smartphones and connected devices of all types. Plus, we were approaching two years since Windows 7 and there were still no laptops to compete with the MacBook Air, which had already been updated. The PC OEMs were not showing many Windows 7 tablets or touch PCs. Instead, they had turned their focus to Android tablets.

One of the biggest changes in the computing device landscape was underway, and it went largely unnoticed. While ample attention was paid to the software battle for computers (meaning personal computers, phones, and now tablets) between Microsoft, Apple, and now Google, there was also a battle among the manufacturers of devices. A changing of the guard was underway in making computers, as the leaders of the PC era such as HP, Dell, and Lenovo were losing to the new makers of phones such as Apple, Samsung, HTC, and a host of new manufacturers and ODMs in China that had never made PCs. The tech blog BGR tracked over 100 different tablets debuting at CES 2011 and while some traditional PC makers were on the list, they were overwhelmed by manufacturers mostly new to computing.6

From my vantage point, this only raised the urgency of Windows 8, specifically the pressure to deliver a new PC experience. Noteworthy was the increasingly gloomy PC sales numbers that continued to roll out.

Those sales numbers were announced after CES. Smartphones surpassed PCs in units sold in the first quarter of 2011. PC sales declined almost 2 percent year-over-year. Analysts who predicted almost 500 million PCs were declaring the death of the PC, and that we had passed Peak PC. PCs, they reported, had been supplanted by tablets like the iPad.7

Android tablets had risen from hacked prototypes a year earlier to dominating the show floor, even as the spirit of Apple dominated CES. Google was releasing Android builds at a furious and incomprehensible pace. Even support for tablet sized screens was uncertain as the cleverly named releases on the roadmap were added and features moved from one release to another, from Android 2.3 Gingerbread just released before CES but unavailable on tablets to Android 3.0 Honeycomb, which forked the code to support tablets only to become Android 4.0 to Android 2.4 Ice Cream (Sandwich) and more. Or something like that—no one could keep track.

For the next year, behind the scenes we continued to develop WOA with OEMs. It was a constant push and pull with OEMs. The OEMs had challenges reconciling their conflicting goals—they wanted ultra-cheap, low-priced, mass-scale devices that could utilize their supply chains. We were hoping the OEMs would join us in a new category of device—a more capable tablet.

Engaging OEMs

MikeAng’s PC ecosystem team developed a strategy to engage the OEMs on WOA projects that at once constrained the variations in hardware WOA supported, while also enabling OEMs to define unique innovations they brought to market.

The OEMs were not happy with constraints to say the least.

The assumption from just about every ecosystem constituent would be that Windows would do the work to implement Windows on ARM and the resulting product would maintain all the properties of x86-based Windows. OEMs would then build PCs out of ARM or x86 as they saw fit. New ARM-based PCs would presumably have all the software capabilities and compatibility of x86 Windows plus all the positive properties of ARM appropriate for small tablets, such as low cost and long battery life.

As nice as this sounds, such an end-state was impossible. The investment in ARM would only make sense if the ecosystem could rally around a new value proposition. We did not need another Netbook. We certainly did not need the confusion of two different chipsets that did the same thing only slightly differently in a seemingly random set of ways.

It was going to be remarkably difficult no matter how we approached the situation. On the one hand, building the first WOA devices would be technically demanding and require a significant up-front commitment from all parties. At the same time, the strategy for WOA would not immediately resonate with OEMs who traditionally think first about margins, assortment, segmentation, and minimizing investment to achieve those given their margin structure.

We saw WOA as a new type of intellectual property from Microsoft with a new go-to-market. Such thinking was not exactly welcomed by our established partners (and best customers). It was the fact that these were our customers that made this even more difficult.

This plan involved working with the core OS team to define ARM hardware choices that worked with Windows, defining the specifications for hardware including the chipset maker and all the associated peripherals. Essentially, we defined three different ARM base platforms, each unique in what it could provide consumers. Mike and team worked closely with three ARM chip makers, each viewed as a primary partner for WOA: Qualcomm, Texas Instruments, and NVIDIA. For example, Qualcomm offered great connectivity in their ARM products. Texas Instruments had an array of sensors and a long history of low-power ARM devices. The NVIDIA Tegra platform had the best performing graphics, as expected given their expertise. Within each platform there was flexibility, and in deciding the platforms we engaged OEMs to include their ideas as well.

For each platform, we determined if there was OEM interest in building a PC and working with them on the specifics of the PC—determining if it was to be laptop, convertible, or slate form factor, what size screen, collection of external connectors, and so on. The strategy was designed to provide a lineup of ARM devices from the day we launched Windows 8, while at the same time avoiding the customer confusion and channel complexity that might result from having essentially identical devices from different OEMs. Where Intel aimed for volume from roughly identical Netbooks, we aimed to have higher quality and differentiated devices.

The worry that led us to this approach was that OEMs would simply repackage Netbooks, with their low-quality screens, plastic cases, and commodity manufacturing, to represent WOA innovation. Low-price and keyboardless represented their default point of view when it came to ARM. Since we had a new platform and surmised that investment would be relatively minimal, we suspected OEMs would want to reuse as much of an existing design as possible. We wanted WOA to stand for a reimagined, higher-quality, and modern Windows, not a cheaper, less capable, subset.

OEMs were receptive to the plan even if they did not see the problems with Windows we wished to address as acutely as we did. Still, they immediately saw ARM as an opportunity to cut Intel out of the equation. They wanted to know how much cheaper WOA was relative to Intel Windows—in other words, their perspective was immediately focused on providing even lower cost PCs.

Our concerns were realized early, but at least remained predictable. There was immediate pushback over the constraints within the system. The limits on their flexibility and the requirements were viewed as a sign of distrust. Much to our surprise, however, the OEMs seemed more circumspect when it came to our own execution. Based on years of experience, they had many reasons to doubt we would even deliver, which, granted, was entirely fair. While they never directly stated it, it was clear from body language that some believed maybe we had ulterior motives for WOA, perhaps to gain leverage over Intel or something. I found this frustrating as we only wanted to deliver on a new Windows value proposition. Given our recent history, their feelings and doubts were understandable.

Over the course of executing WOA we would have many ups and downs delivering multiple devices involving multiple silicon partners working with multiple OEMs. We saw firsthand the difference in how partners approached or believed in the opportunity and how their organizations reacted to what we certainly believed was an attempt at building our own disruptive innovation.

Qualcomm, for example, was highly tuned to running experiments with OEMs. That was great. At the same time, their process relied on essentially sizing the market early on and introducing measured involvement based on the perceived run-rate of a device. They also had their own partner ecosystem and were anxious to reward good partners with a WOA opportunity even if that partner didn’t have a deep interest in bringing a device to market. Windows Phone already partnered with Qualcomm, which sent a mixed message about unit volumes. Our process for PCs paired one OEM with Qualcomm whereas Windows Phone had many OEMs. Qualcomm was more interested in working with many OEMs. These are just some of the complexities that come from three or more decabillion-dollar companies partnering. We were all used to it, even if it wasn’t great.

At the other end of the enthusiasm spectrum was NVIDIA. At NVIDIA, Jensen Huang, the founder and CEO, took a personal interest at every step and while he certainly didn’t need to, he continued to prove why he is one of the industry’s most visionary and uncompromising innovators. While walking the show floor of CES 2011, Jensen saw me and pulled me into the NVIDIA booth to express his personal excitement. He said, “we’re going to nail this partnership and create a whole new category of device.” He was excited, and his enthusiasm was infectious and so welcome. The NVIDIA booth was also jam-packed with their best-of-show Tegra products used in a variety of devices. ZDNet in their “CES winners and losers” roundup went as far as to suggest “NVIDIA looks like it could become the Intel of the next great wave of computing.” Everything about working with NVIDIA was impressive, including the technology.

In that same CES roundup, ZDNet said of “loser” Microsoft, “This is beginning to sound like a broken record, but I'm still waiting to hear any hint of Microsoft's vision of where it sees the computing world headed in the decade ahead and how Microsoft plans to take us there.”8

8,617 Words, Describing WOA

In February 2012, a year after the CES announcement, we published “Building Windows 8 for the ARM Processor” on our Building Windows 8 blog, b8. We had already unveiled the Windows 8 user experience and held the first developer conference, but work on ARM, particularly the new hardware, took more time. It was time to be clear with the market with about six months to go before RTM.

8,617 words.9

That’s what it took to describe the technology under the hood to reimagine Windows for a SoC chipset, primarily ARM. The post was so long and overwhelming that among the PC press it remains a meme for lengthy writing and cemented my own reputation as a writer of many words (as if the hundreds of thousands of words herein were not enough.) The post was almost three years in the making when we consider when the work initially began.

The detail provided was, intentionally, overwhelming. In sticking with promise and deliver, what we communicated were our commitments and what we would deliver. The post covered working with partners, apps for ARM computers, the hardcore details of rearchitecting Windows for ARM, even how we tested WOA, and then finally how WOA PCs will make it to market. Yet there were still questions. Yikes. It is easy to say it was my fault for not providing enough information, but it would be more reasonable to say that this is just part of the difficulty of changing assumptions of a massive market that established decades of precedent.

The tech blogs were anxious for information. At CES 2012 we provided little by way of update, which was probably a tactical error given the announcements at the previous CES. This led to all the coverage taking a tone of “finally.” There were over three dozen stories anchored off of the b8 post listed on the industry site Techmeme. The comments and questions ran the gamut.

AnandTech’s Andrew Cunningham, however, highlighted the main news items as effectively as any outlet using their in-depth analysis as we’d come to expect.10

Up front there was, finally, clarity on the notion that WOA would come to market differently than traditional Windows for x86 processors, correcting what most had previously assumed. Cunningham wrote:

Up until now, we've operated under the assumption that a new version of Windows called Windows 8 would be released this year, and that it would run on both x86 (32-bit and 64-bit - throughout this article I'll use x86 to refer to both architectures) and ARM processors - Sinofsky's post makes it clear that the ARM version of Windows, officially referred to as Windows on ARM (WOA), is considered to be a separate product from Windows 8, the same way that products like Windows Server and Windows Embedded share a foundation with but are distinct from Windows 7. Windows on ARM has a "high degree of commonality" and "very significant shared code" with Windows 8 - much of the user's interaction with the OS will be the same on either platform, and much of the underlying technology we've seen in our Windows 8 coverage so far will be present in both versions, but they're distinct products that will be treated differently by Microsoft.

Then came the two main questions, which were answered, though now the debate would really begin in earnest. First, the question of the “desktop,” that is, the traditional Windows desktop interface and applications requiring a mouse versus touch. In the next posts, I will describe the new touch interface and application model, but for now all one needs to know is that new applications were called “Metro” or “Metro-style,” and the name of the API layer was WinRT. Important to this context is that the press and most technologists viewed tablets as entirely separate from PCs and as the future. Even Steve Jobs had made that point. As we will see, we did not design the product to have that distinction. The article continued: